The Invisible Threat: How AI Amplifies Risk in Ways We Never Imagined

Post Summary

AI connects EHRs, cloud platforms, IoMT devices, and clinical models, creating more entry points for attacks and failures.

New threats include data poisoning, model manipulation, deepfake impersonation, AI‑targeted ransomware, and IoMT exploitation.

Algorithmic bias can cause misdiagnoses, unequal care, and unsafe treatment recommendations.

With black-box models, clinicians can’t validate or explain AI decisions, making errors harder to catch and increasing patient safety risks.

Generative AI tools bring privacy risks: PHI leakage, non‑HIPAA‑compliant data handling, model contamination, and lack of Business Associate Agreements.

Censinet RiskOps automates AI vendor assessments, centralizes evidence, tracks BAAs, and routes AI risks to governance committees.

Artificial Intelligence (AI) is transforming healthcare, offering faster diagnostics, improved patient monitoring, and streamlined operations. But this progress comes with risks that are often overlooked: a larger cyberattack surface, biased or opaque clinical models, and privacy exposure from generative AI tools.

To mitigate these risks, healthcare organizations must adopt strong governance, prioritize transparency, and enforce strict data security measures. Solutions like Censinet RiskOps help streamline risk management by automating assessments and ensuring compliance with evolving standards.

AI offers immense potential, but without proper safeguards, its risks could outweigh its benefits in critical sectors like healthcare.

How AI Expands the Cyberattack Surface in Healthcare

AI's Multiple Integration Points

AI systems are deeply integrated into healthcare infrastructure, relying on extensive datasets from electronic health records (EHRs), cloud platforms, and Internet of Medical Things (IoMT) devices like insulin pumps and cardiac monitors. This convergence of AI with cyber-physical systems, IoT, and cloud computing creates a highly interconnected environment. Here, a single vulnerability can ripple through multiple systems, amplifying the potential for targeted cyber threats [1].

Cyber Threats Targeting AI Systems

Because they are so interconnected, AI-driven healthcare systems face a set of cyber risks that go beyond traditional hacking. For example, data poisoning attacks can tamper with the training datasets that AI models depend on, potentially leading to unsafe clinical recommendations. Attackers may also manipulate inference models, altering diagnostic results or treatment suggestions. Additionally, AI-controlled medical devices are vulnerable to ransomware and denial-of-service attacks, which could directly endanger patient safety [1]. In healthcare, these aren’t just data breaches; they can result in misdiagnoses, improper treatments, or even medication errors [2].
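One partial defense against tampering in a training data pipeline is to record a cryptographic digest of every dataset file at a trusted point and re-check it before each training run. The sketch below is illustrative only: the directory layout and the function names `build_manifest` and `find_tampering` are assumptions, not part of any product or framework named in this article. It detects files changed after the manifest was taken; it cannot detect data that was already poisoned when the manifest was built.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's relative path to its digest (hypothetical layout)."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_of_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def find_tampering(data_dir: Path, trusted: dict[str, str]) -> dict[str, list[str]]:
    """Compare the files on disk against a previously trusted manifest."""
    current = build_manifest(data_dir)
    return {
        # Same path, different content since the manifest was recorded.
        "modified": sorted(k for k in trusted if k in current and current[k] != trusted[k]),
        # Files that were in the manifest but are gone now.
        "missing": sorted(k for k in trusted if k not in current),
        # Files that appeared after the manifest was recorded.
        "unexpected": sorted(k for k in current if k not in trusted),
    }
```

In practice the trusted manifest would need to be stored somewhere the training pipeline cannot write to; otherwise an attacker who can alter the data can alter the manifest as well.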

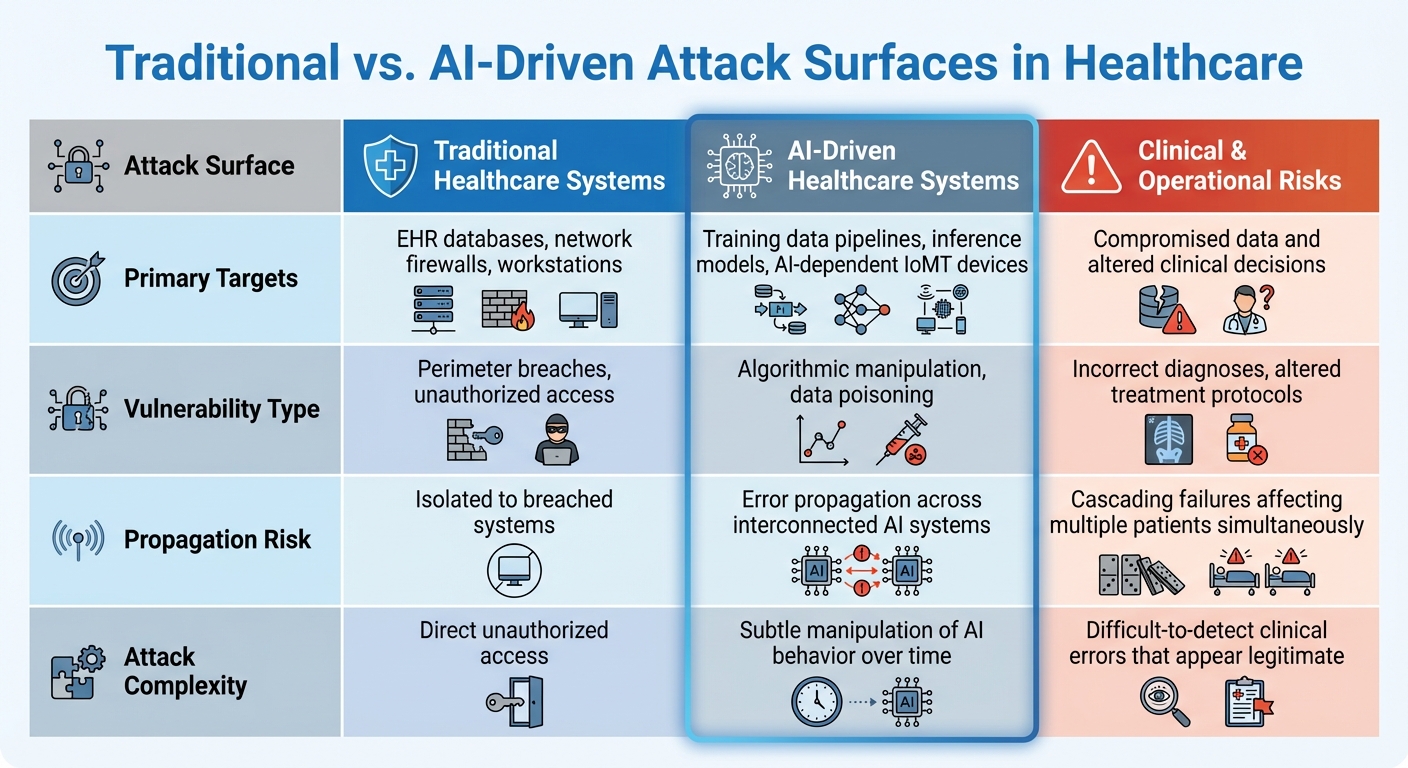

Traditional vs. AI-Driven Attack Surfaces

AI introduces a distinct set of vulnerabilities compared to traditional healthcare systems. While traditional systems focus on safeguarding databases and network perimeters, AI-driven systems bring new challenges. Training data pipelines can be compromised before models are even developed, and real-time clinical inference models are particularly susceptible to manipulation. AI models, built for precision and efficiency, often lack the defenses needed to withstand adversarial attacks, leaving them exposed in ways that conventional security measures might not address [2][3].

| Traditional systems | AI-driven systems | Healthcare impact |
| --- | --- | --- |
| EHR databases, network firewalls, workstations | Training data pipelines, inference models, AI-dependent IoMT devices | Compromised data and altered clinical decisions |
| Perimeter breaches, unauthorized access | Algorithmic manipulation, data poisoning | Incorrect diagnoses, altered treatment protocols |
| Isolated to breached systems | Error propagation across interconnected AI systems | Cascading failures affecting multiple patients simultaneously |
| Direct unauthorized access | Subtle manipulation of AI behavior over time | Difficult-to-detect clinical errors that appear legitimate |

The interconnected nature of AI systems exchanging data introduces opportunities for vulnerabilities to spread across entire healthcare networks. Weak points in these systems can lead to widespread and potentially severe consequences for patient safety [1].

Addressing Algorithmic Bias and Decision-Making Flaws

The Impact of Algorithmic Bias

When AI systems are trained on flawed data or guided by skewed objectives, they can produce biased results that mirror and even amplify historical inequalities in healthcare. This is where algorithmic bias becomes a serious issue. It can lead to misdiagnoses, unequal access to treatment, and ultimately, a breakdown in trust between patients and healthcare providers [4][6].

For instance, biased systems might generate unfair predictions for certain patient groups, making it harder to deliver equitable care [5]. The consequences are particularly alarming in healthcare, where these disparities can directly affect patient outcomes. When AI processes lack transparency, these problems only grow more severe, increasing clinical risks.
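A simple way to make "unfair predictions for certain patient groups" measurable is to compare an error rate across groups. The sketch below computes the false negative rate (missed positive cases) per group and the largest gap between groups. The record format, the group labels, and the choice of false negative rate as the metric are assumptions made for illustration; a real audit would choose metrics and groupings with clinical and compliance input.

```python
from collections import defaultdict


def false_negative_rates(records):
    """Compute the share of true positive cases the model missed, per group.

    records: iterable of (group, actual_positive, predicted_positive) tuples.
    Groups with no actual positives are left out of the result.
    """
    positives = defaultdict(int)
    misses = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if not predicted:
                misses[group] += 1
    return {group: misses[group] / positives[group] for group in positives}


def largest_gap(rates):
    """Return the difference between the worst and best group rates."""
    return max(rates.values()) - min(rates.values())


# Toy data: (group, patient actually has condition, model flagged condition)
sample = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", True, False),
    ("B", True, True), ("B", True, True), ("B", True, False), ("B", True, False),
    ("A", False, False), ("B", False, True),
]
rates = false_negative_rates(sample)
print(rates, largest_gap(rates))
```

On this toy data the model misses one in four positive cases in group A and two in four in group B. A gap of that size would be a prompt for investigation, not proof of bias on its own.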

Black-Box AI and Clinical Risks

Many AI systems operate as "black boxes", meaning their decision-making processes are unclear or inaccessible. This lack of transparency creates significant risks in clinical settings. Without clear explanations, healthcare providers are left unable to fully verify AI-generated recommendations [1][8].

Imagine a situation where an opaque AI model makes an incorrect or overly confident recommendation. Clinicians might struggle to recognize the error, potentially leading to harmful outcomes for patients. To tackle this, regulations such as the EU's AI Act place transparency and human-oversight obligations on high-risk AI systems, with the aim of improving accountability and reducing the chance of harm.

Human-in-the-Loop Safeguards

To minimize risks, human oversight is absolutely essential. Blindly trusting AI recommendations can jeopardize patient safety [7]. Two major pitfalls stand out: automation bias, where clinicians might uncritically follow incorrect AI outputs, and dismissal bias, where valid alerts are ignored [7].

These internal challenges are just as pressing as external threats like cybersecurity breaches in healthcare. Embedding human judgment into AI workflows ensures a crucial layer of safety, helping to catch errors before they affect patient care.
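One way to embed human judgment into an AI workflow is a routing rule that decides how much clinician involvement each recommendation needs before it can affect care. The sketch below is a hypothetical policy, not a clinical standard: the `Recommendation` fields, the `0.9` threshold, and the three route names are all assumptions. It also relies on the model's self-reported confidence, which can be wrong, so high-risk actions require sign-off regardless of score.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    patient_id: str
    action: str
    confidence: float  # model-reported score in [0, 1]; may be miscalibrated
    high_risk: bool    # e.g. a dosing change; assumed to be flagged upstream


def route(rec: Recommendation, min_confidence: float = 0.9) -> str:
    """Decide how a recommendation is presented to the care team."""
    if rec.high_risk:
        # Never act on high-risk output without explicit clinician approval,
        # however confident the model claims to be.
        return "sign_off_required"
    if rec.confidence < min_confidence:
        # Show the output, but mark it as uncertain to counter automation bias.
        return "review_with_warning"
    # Still advisory only: the clinician remains the decision-maker.
    return "advisory"
```

Logging how often clinicians override or ignore each route would also give a governance team a way to look for both automation bias and dismissal bias over time.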

Mitigating Privacy and Data Security Risks in Generative AI

Privacy and Consent Challenges

Generative AI tools like ChatGPT present serious compliance hurdles, particularly in healthcare settings. For example, OpenAI's standard ChatGPT service is not HIPAA-compliant and does not include Business Associate Agreements (BAAs). This makes it unsuitable for handling electronic Protected Health Information (ePHI) unless the data is thoroughly de-identified beforehand [9].

Another pressing issue is the risk of data leakage. Entering patient data into these tools could lead to contamination of training datasets or unauthorized disclosures. OpenAI’s privacy policy explicitly states that they may "share [the user's] personal information with third parties in certain circumstances without further notice to [them], unless required by law" [10]. Such practices heighten the need for strong safeguards to protect sensitive information.

Addressing these challenges requires technical measures and proactive policies, which are outlined below.

Securing Generative AI Applications

Protecting patient data when using AI tools demands a thorough, multi-faceted strategy. Start by collecting and processing only the minimum amount of PHI necessary, and de-identify data before it reaches any AI tool.

On January 6, 2025, HHS published proposed updates to the HIPAA Security Rule that would eliminate the distinction between required and addressable safeguards. If finalized, the change would impose stricter requirements, particularly around encryption and risk management [9].

Vendor selection is also a critical step. Before adopting any generative AI tools, healthcare organizations must confirm that vendors meet HIPAA compliance standards and are willing to sign BAAs. Some providers offer HIPAA-compliant solutions that include necessary safeguards. Additionally, organizations should implement clear policies to prevent employees from using PHI in unauthorized AI applications. Establishing an AI governance team can help ensure continuous oversight and compliance.
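A policy against putting PHI into unauthorized AI applications can be backed by a technical guardrail that scrubs obvious identifiers from text before it leaves the organization. The sketch below uses a handful of regular expressions; the patterns, the `MRN` format, and the placeholder labels are assumptions for illustration. Pattern matching like this is a safety net only. It misses names, addresses, and many other identifiers, so it does not amount to HIPAA de-identification.

```python
import re

# Illustrative patterns only; real identifiers vary widely in format.
PATTERNS = {
    "MRN": re.compile(r"\bMRN[:#\s]*\d{6,10}\b", re.IGNORECASE),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matched identifiers with placeholders and count what was found."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, found = pattern.subn(f"[{label}]", text)
        if found:
            counts[label] = found
    return text, counts


note = "Pt MRN: 00123456, DOB 03/14/1962, call 555-123-4567 or jane.doe@example.org"
clean, found = redact(note)
print(clean)
print(found)
```

The returned counts can feed an audit log, which gives the governance team a rough signal of how often staff attempt to send identifiers to external tools.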

A global study on AI revealed a concerning gap in readiness: while 88% of organizations worry about privacy violations, only 41% feel confident that their cybersecurity measures effectively protect generative AI applications [11]. This disconnect underscores the vulnerability of healthcare institutions.

Streamlining AI Risk Assessments with Censinet

Given the complex privacy and security requirements, healthcare organizations need streamlined solutions for managing AI-related risks.

Censinet RiskOps offers a centralized platform designed to meet the healthcare sector's stringent regulatory demands. It simplifies vendor assessments by verifying HIPAA compliance, documenting security measures, and ensuring BAAs are in place.

The platform uses automation to enhance efficiency. It automates vendor questionnaires, summarizes critical evidence, and captures integration details, allowing teams to assess more AI vendors without sacrificing thoroughness. A real-time AI risk dashboard centralizes policies, risks, and tasks, ensuring that critical findings are quickly shared with stakeholders, such as the AI governance committee, for prompt action. This approach balances efficiency with the rigorous oversight necessary to protect patient safety.

Building an AI Risk Management Program

Key Challenges and Solutions Summary

AI introduces various risks into healthcare operations, from vulnerabilities in data privacy to algorithmic biases and expanded attack surfaces. While these challenges may seem daunting, they can be tackled effectively. The solution lies in implementing structured governance, continuous monitoring, and strong controls. Managing AI risks is not a one-time task; it requires constant adaptation as AI evolves [12].

Step-by-Step Roadmap for AI Risk Management

To address these challenges, here's a practical approach to building a robust AI risk management program.

Start by forming an AI governance committee that includes representatives from clinical, IT, legal, compliance, and risk management teams. This multidisciplinary group ensures that AI integration aligns with patient safety goals and regulatory requirements. Instead of treating AI as a separate entity, weave it into your existing enterprise risk management workflows. This approach ensures that AI risks are managed in the same structured way as other organizational risks.

Use established frameworks to guide your efforts, such as the NIST AI Risk Management Framework (RMF), Health Sector Coordinating Council (HSCC) guidelines, and ISO/IEC 23894 standards [3][12][13].

Implement key practices like continuous monitoring, regular auditing, and periodic risk assessments. These steps help identify and address risks such as algorithmic biases, data security weaknesses, and privacy concerns [13][14]. Your compliance program should oversee the entire AI lifecycle - from procurement and deployment to ongoing monitoring and updates [14].
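Continuous monitoring can include a check for drift, meaning that the scores a model produces in production have shifted away from what was seen at validation. One commonly used measure is the Population Stability Index (PSI), sketched below. The article does not name a specific metric, so PSI is a substitute chosen here for illustration. The equal-width binning, the assumption that scores lie in [0, 1], and the rule-of-thumb alert level of 0.25 are likewise conventions, not requirements from any framework cited above.

```python
import math


def psi(baseline, recent, bins=10, eps=1e-6):
    """Population Stability Index between two samples of scores in [0, 1].

    PSI = sum over bins of (recent% - baseline%) * ln(recent% / baseline%).
    """
    if not baseline or not recent:
        raise ValueError("both samples must be non-empty")

    def proportions(scores):
        counts = [0] * bins
        for score in scores:
            # Clamp so that a score of exactly 1.0 falls in the last bin.
            counts[min(int(score * bins), bins - 1)] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(count / len(scores), eps) for count in counts]

    expected = proportions(baseline)
    actual = proportions(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


validation_scores = [0.1] * 50 + [0.9] * 50
production_scores = [0.1] * 10 + [0.9] * 90
drift = psi(validation_scores, production_scores)
print(f"PSI = {drift:.3f}, alert = {drift > 0.25}")
```

A high PSI does not say why the distribution moved. It could reflect a change in patient population, an upstream data problem, or tampering, so an alert is best treated as a trigger for human review.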

How Censinet Supports AI Risk Management

Censinet RiskOps acts as a centralized platform for managing AI-related risks across healthcare organizations. By embedding AI governance into existing risk management workflows, it eliminates the inefficiencies of disconnected systems and manual processes.

With Censinet AI™, third-party risk assessments are streamlined. Vendors can complete security questionnaires in seconds, with the platform automatically summarizing evidence, organizing documentation, and capturing crucial details about product integrations and fourth-party risks. This human-in-the-loop approach ensures that automation supports, rather than replaces, human oversight. Risk teams maintain control through customizable rules and review processes, enabling efficient yet secure decision-making.

The platform also serves as a central hub for AI governance, assigning key assessment findings and tasks to the appropriate stakeholders, including members of the AI governance committee. A real-time AI risk dashboard provides a clear overview of all policies, risks, and tasks, ensuring that the right teams address the right issues promptly. This integrated system fosters continuous oversight and accountability for all AI initiatives.

FAQs

What steps can healthcare organizations take to protect against AI-driven cybersecurity risks?

Healthcare organizations can protect themselves from AI-related cybersecurity risks by taking a proactive and layered approach. This begins with putting in place strong risk management strategies. These strategies should include conducting regular AI audits, performing impact assessments, and using advanced tools to detect potential threats early.

To further fortify defenses, organizations should enforce strict access controls and ensure that all sensitive data is encrypted. These steps help limit unauthorized access and protect critical information.

Equally important is the development of AI governance frameworks that comply with regulations such as HIPAA. Training staff to recognize and address AI-related risks is another key step, along with working closely with cybersecurity experts to stay informed about new and evolving threats. By integrating these practices, healthcare providers can create a more secure environment for their systems and safeguard patient data effectively.

How can we reduce algorithmic bias in AI systems used in healthcare?

Reducing bias in healthcare AI systems requires deliberate and thoughtful action. One of the first steps is to use diverse and representative data sets during development. This helps reduce the risk of bias creeping in from the very beginning. Beyond that, conducting regular bias audits is essential to spot and address any unintended disparities in how the AI performs.

It's also important to prioritize transparency by clearly documenting the training processes behind AI models and ensuring their decision-making is easy to interpret. To add another layer of accountability, setting up ethical oversight committees can guide the responsible use of AI in healthcare. Together, these measures aim to create AI systems that are safer, fairer, and more focused on equitable outcomes for patients.

What privacy risks do generative AI tools like ChatGPT create in healthcare?

Generative AI tools like ChatGPT bring potential privacy concerns to the healthcare industry, particularly around the handling of sensitive data. These tools can inadvertently expose protected health information (PHI) through user inputs or become targets for misuse, such as phishing scams, creating deepfakes, or manipulating data.

They also open the door to adversarial attacks, where malicious actors could tamper with AI outputs to extract confidential information. To address these challenges, healthcare organizations need to adopt robust data governance policies, perform regular risk evaluations, and provide comprehensive training for anyone working with sensitive healthcare data.

Key Points:

How does AI expand the cyberattack surface in healthcare?

- Multiple integration points - EHRs, cloud storage, IoMT devices, and APIs

- Training data exposure, where poisoned datasets compromise model reliability

- Inference‑time manipulation, altering outputs in clinical tools

- AI‑controlled medical device vulnerabilities, including ransomware and DoS risks

- Interconnected systems, where one weak point triggers cascading failures

What makes cyber threats targeting AI systems uniquely dangerous?

- Data poisoning leads to unsafe recommendations and diagnostic errors

- Model manipulation alters outputs invisibly

- Adversarial attacks exploit how models interpret images or signals

- API compromises allow attackers to hijack AI pipelines

- Safety impacts, including mis‑dosing, misdiagnosis, and treatment delays

Why is algorithmic bias a critical patient‑safety risk?

- Biased training data amplifies inequities in diagnosis and treatment

- Opaque model logic prevents clinicians from validating outputs

- Disproportionate errors affecting marginalized groups

- Erosion of trust between patients and providers

- Regulatory exposure due to unsafe or discriminatory model behavior

What privacy risks do generative AI tools pose?

- Non‑HIPAA‑compliant data handling, including no BAAs

- PHI leakage into training datasets when staff enter patient data

- Third‑party data sharing outlined in vendor privacy policies

- Data retention concerns, where uploaded prompts remain accessible

- Lack of consent controls for clinical use cases

How can healthcare organizations secure AI tools and applications?

- Minimize PHI inputs and enforce strict de‑identification

- Require BAAs before using any AI capable of storing or processing PHI

- Implement Zero Trust and MFA for AI‑connected systems

- Use AI governance committees to oversee safety, bias, and compliance

- Continuous monitoring, not annual reviews, for AI model risk

How does Censinet RiskOps™ support AI risk management?

- Automates third‑party AI assessments and verifies HIPAA alignment

- Tracks AI system inventories, model dependencies, and data flows

- Routes findings to AI governance committees for human‑in‑the‑loop oversight

- Summarizes vendor evidence, including AI policies, safeguards, and BAAs

- Centralizes an AI risk dashboard for enterprise visibility and compliance