Privacy-Preserving Data Sharing in Healthcare Research

Post Summary

Privacy-preserving data sharing ensures sensitive healthcare data is shared securely while maintaining patient confidentiality and compliance with regulations.

It enables collaboration and innovation in research while protecting patient privacy and adhering to legal frameworks like HIPAA.

Challenges include balancing data utility with privacy, ensuring compliance, and implementing advanced technologies like encryption and anonymization.

Technologies like federated learning, homomorphic encryption, and differential privacy are key enablers.

It fosters innovation, accelerates discoveries, and builds trust among stakeholders by ensuring ethical data use.

The future includes advancements in AI, blockchain, and global data-sharing frameworks to enhance security and collaboration.

Sharing healthcare data for research is essential but comes with privacy challenges. Combining data from multiple institutions helps identify patterns, improve AI models, and monitor public health trends. However, risks like re-identification and data breaches make privacy protection crucial.

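The sections below cover the main privacy-preserving methods, the governance practices that support them, and how to build a data-sharing strategy around both.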

Privacy-Preserving Methods and Technologies

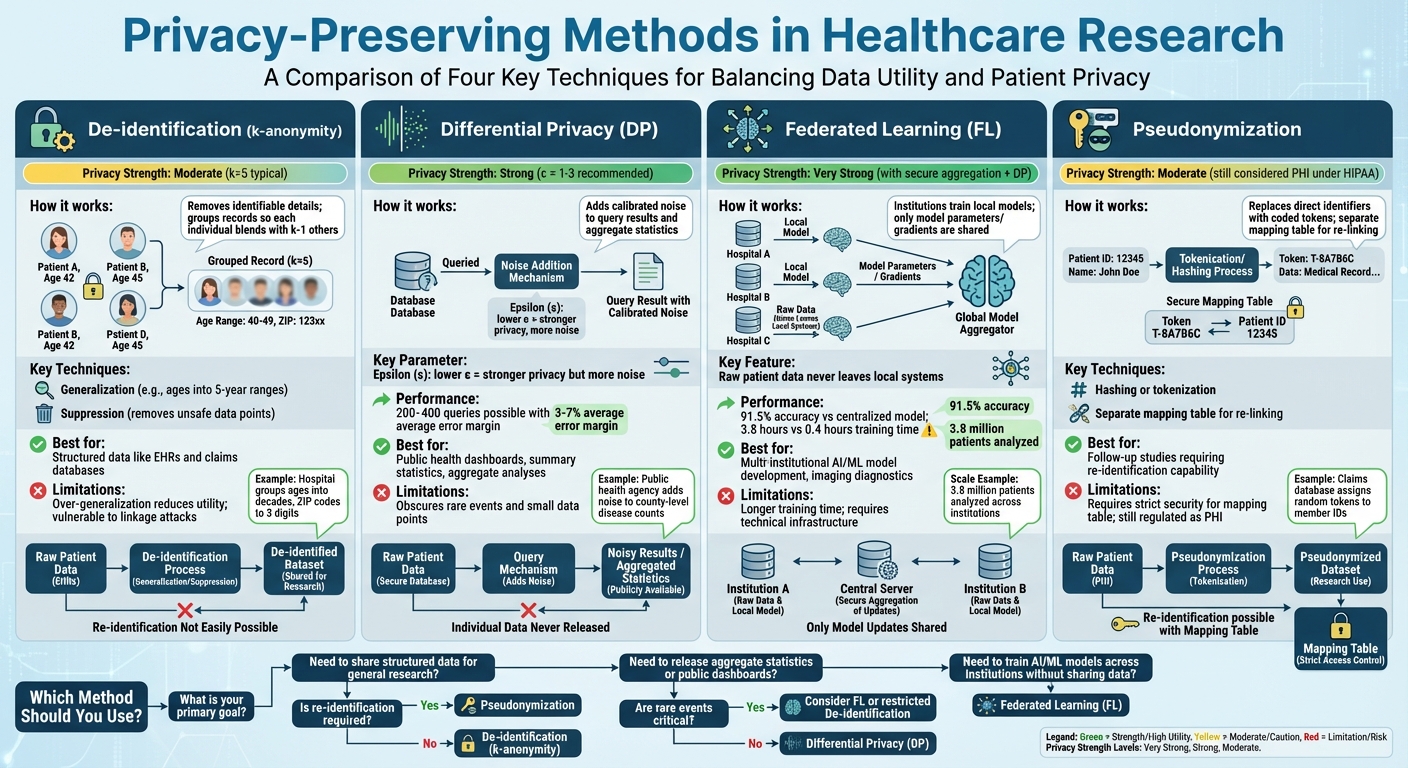

Privacy-Preserving Methods in Healthcare Research: Comparison of Techniques

De-identification and Anonymization Techniques

De-identification is a process where patient records are stripped of details that could reveal their identity, while still keeping the critical clinical data intact. A common method for this is k-anonymity, which ensures that any combination of quasi-identifiers - like age, ZIP code, or gender - appears in at least k records. This way, each individual blends into a group of at least k−1 others, making identification much harder.[4]

To achieve k-anonymity, two main techniques are used: generalization and suppression. Generalization involves replacing specific values with broader categories. For instance, exact ages might be grouped into five-year ranges (e.g., 30–34, 35–39), and ZIP codes might be truncated to the first three digits. Suppression, on the other hand, removes data points or entire records that can't be grouped safely. For example, a U.S. hospital might group ages into decades and shorten ZIP codes to three digits so that every equivalence class contains at least five records (k=5) before the data is shared.[4]
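To make generalization and suppression concrete, here is a minimal Python sketch. The field names (`age`, `zip`, `sex`, `diagnosis`), the decade banding, and the sample records are illustrative assumptions, not taken from any cited study, and the sketch does not implement HIPAA Safe Harbor rules.

```python
from collections import Counter

def generalize(record):
    """Coarsen quasi-identifiers: age to a decade band, ZIP to its first three digits."""
    decade = (record["age"] // 10) * 10
    return (f"{decade}-{decade + 9}", record["zip"][:3], record["sex"])

def k_anonymize(records, k):
    """Generalize every record, then suppress records whose equivalence class has fewer than k members."""
    keys = [generalize(r) for r in records]
    class_sizes = Counter(keys)
    released = []
    for record, key in zip(records, keys):
        if class_sizes[key] >= k:
            age_band, zip3, sex = key
            released.append({"age": age_band, "zip": zip3, "sex": sex,
                             "diagnosis": record["diagnosis"]})
    return released

# Hypothetical records for illustration only.
records = [
    {"age": 34, "zip": "02139", "sex": "F", "diagnosis": "asthma"},
    {"age": 37, "zip": "02141", "sex": "F", "diagnosis": "diabetes"},
    {"age": 31, "zip": "02138", "sex": "F", "diagnosis": "asthma"},
    {"age": 62, "zip": "94110", "sex": "M", "diagnosis": "copd"},
]
released = k_anonymize(records, k=3)
```

With k=3, the first three records fall into one shared class and are released in generalized form, while the fourth record is alone in its class and is suppressed.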

Building on k-anonymity, l-diversity and t-closeness add extra layers of protection for sensitive information like medical diagnoses. L-diversity ensures that each group contains at least l distinct values for sensitive fields, preventing scenarios where everyone in a group shares the same diagnosis. T-closeness goes even further, ensuring the distribution of sensitive attributes within a group mirrors the overall dataset, limiting what attackers can infer.[2][4]
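A rough way to check these two properties on an already generalized table is sketched below. The function names and sample rows are assumptions for illustration. The second function uses total variation distance as a simple stand-in for the distance measure; t-closeness as originally defined uses Earth Mover's Distance, so treat this as an approximation of the idea rather than a full implementation.

```python
from collections import Counter, defaultdict

def group_by_class(rows, quasi_identifiers, sensitive):
    """Collect the sensitive values that appear in each equivalence class."""
    classes = defaultdict(list)
    for row in rows:
        classes[tuple(row[q] for q in quasi_identifiers)].append(row[sensitive])
    return classes

def distinct_l(rows, quasi_identifiers, sensitive):
    """Smallest number of distinct sensitive values found in any class."""
    classes = group_by_class(rows, quasi_identifiers, sensitive)
    return min(len(set(values)) for values in classes.values())

def max_distribution_gap(rows, quasi_identifiers, sensitive):
    """Largest total variation distance between a class's sensitive-value
    distribution and the distribution over the whole table."""
    overall = Counter(row[sensitive] for row in rows)
    total = len(rows)
    worst = 0.0
    for values in group_by_class(rows, quasi_identifiers, sensitive).values():
        local = Counter(values)
        gap = 0.5 * sum(abs(local[v] / len(values) - overall[v] / total)
                        for v in overall)
        worst = max(worst, gap)
    return worst

# Hypothetical generalized rows for illustration only.
rows = [
    {"age": "30-39", "zip": "021", "diagnosis": "flu"},
    {"age": "30-39", "zip": "021", "diagnosis": "asthma"},
    {"age": "60-69", "zip": "941", "diagnosis": "copd"},
    {"age": "60-69", "zip": "941", "diagnosis": "copd"},
]
l_value = distinct_l(rows, ["age", "zip"], "diagnosis")
gap = max_distribution_gap(rows, ["age", "zip"], "diagnosis")
```

Here the second class holds only one diagnosis, so the table is 2-anonymous but only 1-diverse: anyone known to be in that class has their diagnosis revealed.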

Another method, pseudonymization, replaces direct identifiers with coded tokens, often using hashing or tokenization. A separate mapping table, kept under strict security, allows re-linking when necessary for follow-up studies. For example, a claims database might assign random tokens to member IDs while storing the re-identification key separately. Under HIPAA, pseudonymized data generally remains protected health information (PHI) unless the dataset also meets the de-identification standard and the re-identification code is neither derived from patient information nor disclosed along with the data, so it requires careful handling.[2][5]
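The claims example can be sketched in a few lines of Python. The class name, field names, and sample claims below are hypothetical, and a real deployment would keep the mapping table in a separate, access-controlled store rather than in memory.

```python
import secrets

class Pseudonymizer:
    """Assigns random tokens to identifiers and keeps the mapping for authorized re-linking."""

    def __init__(self):
        # Together these two dicts are the re-identification key.
        # In practice they would live apart from the shared dataset.
        self._token_for_id = {}
        self._id_for_token = {}

    def token_for(self, member_id):
        """Return a stable random token for this member, creating one on first use."""
        if member_id not in self._token_for_id:
            token = secrets.token_hex(16)  # random, not derived from the member ID
            self._token_for_id[member_id] = token
            self._id_for_token[token] = member_id
        return self._token_for_id[member_id]

    def reidentify(self, token):
        """Re-link a token to its member ID (raises KeyError for unknown tokens)."""
        return self._id_for_token[token]

def pseudonymize_claims(claims, pseudonymizer):
    """Replace member IDs with tokens while leaving the clinical fields untouched."""
    return [{"member_token": pseudonymizer.token_for(c["member_id"]), "code": c["code"]}
            for c in claims]

# Hypothetical claims for illustration only.
claims = [
    {"member_id": "M001", "code": "E11.9"},
    {"member_id": "M002", "code": "J45.909"},
    {"member_id": "M001", "code": "I10"},
]
pseudonymizer = Pseudonymizer()
shared = pseudonymize_claims(claims, pseudonymizer)
```

Because the tokens are random rather than hashes of the member ID, they cannot be reversed by guessing identifiers; re-linking is only possible through the mapping.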

While these techniques are effective for structured data like electronic health records (EHRs) or claims, they have limitations. Over-generalizing data - such as using overly broad age ranges or geographic regions - can reduce its usefulness, potentially obscuring important clinical insights. Additionally, advanced linkage attacks can still pose risks in certain scenarios.[2][4][5]

Differential Privacy for Aggregate Data

Differential privacy (DP) offers a robust way to protect individual data in aggregate analyses. It ensures that the outcome of an analysis is nearly identical whether or not a specific individual's data is included. Instead of removing identifiers, DP introduces carefully calibrated noise to query results, aggregate statistics, or model parameters.[2][3]

This method is particularly useful for sharing aggregate data, such as public health dashboards, summary statistics, or machine learning models, where repeated queries or data linkage could lead to re-identification. DP uses a parameter called epsilon (ε) to control privacy levels. A lower ε value means stronger privacy but more noise, while a higher ε value offers more accurate results but weaker privacy protections. Research shows that with ε values between 1 and 3, DP can support 200 to 400 queries while maintaining an average error margin of 3% to 7% compared to raw data.[3]
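One common way to add calibrated noise to a count is the Laplace mechanism, sketched below under the assumption that adding or removing one patient changes the count by at most 1 (sensitivity 1). The function names are illustrative, and this is a teaching sketch: production systems should rely on a vetted differential privacy library, since naive floating-point sampling has known weaknesses.

```python
import random

def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def dp_count(true_count, epsilon, rng):
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon:
    smaller epsilon means a larger scale and therefore more noise.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1 / epsilon, rng)

rng = random.Random(7)  # seeded only to make the demo repeatable
for epsilon in (0.1, 1.0, 3.0):
    print(f"epsilon={epsilon}: noisy count = {dp_count(120, epsilon, rng):.1f}")
```

Running the loop a few times with different seeds shows the pattern described above: results at ε=0.1 swing widely around the true count, while results at ε=3 stay close to it.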

However, there’s a trade-off. Adding noise can obscure rare events or small data points, reducing precision in some analyses. For example, a public health agency might add enough noise to county-level disease counts to prevent identifying individuals in small counties, but this could also blur trends in areas with low case numbers. Organizations must carefully balance privacy needs with the accuracy required for meaningful research.
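The effect on small counts is easy to quantify if we assume Laplace noise on a counting query (sensitivity 1): the expected absolute error is then 1/ε regardless of the count, so the relative error grows as the count shrinks. The function name and the county sizes below are illustrative assumptions.

```python
def expected_relative_error(true_count, epsilon):
    """Expected |noise| / true_count for Laplace noise with scale 1 / epsilon."""
    if true_count <= 0 or epsilon <= 0:
        raise ValueError("true_count and epsilon must be positive")
    expected_abs_error = 1 / epsilon  # mean absolute value of Laplace(0, 1/epsilon)
    return expected_abs_error / true_count

# Hypothetical county-level case counts, same privacy setting for both.
small_county = expected_relative_error(true_count=4, epsilon=0.5)
large_county = expected_relative_error(true_count=4000, epsilon=0.5)
```

With ε=0.5 the expected error is about 2 cases in both counties, which is half of the small county's true count but a negligible fraction of the large county's.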

Despite these challenges, DP is becoming more common in U.S. healthcare. Its formal privacy guarantees hold even when adversaries have access to external data, making it particularly valuable for publicly shared datasets or scenarios involving repeated queries where traditional de-identification methods might fall short.[2][3]

To complement aggregate-level privacy techniques, federated learning offers another layer of protection by decentralizing data analysis.

Federated Learning and Distributed Analysis

Federated learning (FL) allows hospitals or research institutions to collaborate on training machine learning models without ever sharing raw patient data. Instead of sending sensitive data to a central server, each institution trains a local model on its own dataset. The only information shared is model parameters or gradients, which are then aggregated by a central server or peer network to create a global model. This model is sent back to the institutions for further refinement.[2][3]
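The training loop described above can be sketched with federated averaging on a toy one-variable linear model. Everything here is an illustrative assumption: the model, the learning rate, the number of rounds, and the three synthetic "sites" whose data follow y = 2x + 1. Real deployments train far larger models and add the safeguards discussed later.

```python
def local_update(weights, data, lr=0.05, epochs=20):
    """Run gradient descent on one site's data, starting from the global weights."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

def federated_average(site_weights, site_sizes):
    """Combine site models into a global model, weighting each by its sample count."""
    total = sum(site_sizes)
    w = sum(wt[0] * n for wt, n in zip(site_weights, site_sizes)) / total
    b = sum(wt[1] * n for wt, n in zip(site_weights, site_sizes)) / total
    return (w, b)

def train(sites, rounds=100):
    """Each round: sites train locally, then only their parameters are averaged."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights

# Synthetic data: each site holds different x values from the same relationship y = 2x + 1.
sites = [
    [(x, 2 * x + 1) for x in (0.0, 0.5, 1.0)],
    [(x, 2 * x + 1) for x in (1.5, 2.0)],
    [(x, 2 * x + 1) for x in (0.25, 1.75, 1.25)],
]
w, b = train(sites)
```

Note that `train` never reads another site's `(x, y)` pairs directly into a shared pool; the only values that cross site boundaries are the `(w, b)` tuples.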

This decentralized approach significantly reduces privacy risks since patient data never leaves the local systems. It’s especially useful for developing AI models across multiple institutions - like imaging diagnostics or EHR-based risk scores - where data-sharing restrictions might otherwise block collaboration. For example, a study involving five medical centers achieved 91.5% of the accuracy of a centralized model, with a training time of 3.8 hours compared to 0.4 hours for non-private training, all while keeping raw patient data at each site.[3]

Federated learning also enables large-scale research. For instance, a distributed analysis involving 3.8 million patients identified adverse drug reactions that no single institution could detect on its own, all without exposing individual records. In another case, federated studies demonstrated over 90% of the predictive power of centralized models, proving that effective privacy measures don’t have to come at the cost of scientific progress.[2][3]

Modern federated systems often include additional safeguards. Secure aggregation ensures that the central server only sees combined model updates, not contributions from individual sites. Adding differential privacy to the shared gradients further reduces the risk of inferring details about specific patients from the model parameters. Together, these measures create a strong framework for multi-site research that aligns with both regulatory requirements and institutional risk management.[2][3]
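Secure aggregation is often built on pairwise masks that cancel when all contributions are summed. The sketch below shows only that cancellation idea, with assumptions stated plainly: updates are non-negative integers (for example, fixed-point encoded gradients), masks are generated in one place for brevity, and no site drops out. Real protocols derive each pairwise mask through key agreement between the two sites and handle dropouts.

```python
import secrets

MODULUS = 2**32  # all arithmetic is done modulo this value

def pairwise_masks(num_sites, length):
    """Build one mask per site such that the masks sum to zero modulo MODULUS.

    For each pair (i, j) with i < j, a shared random vector is added to
    site i's mask and subtracted from site j's mask.
    """
    masks = [[0] * length for _ in range(num_sites)]
    for i in range(num_sites):
        for j in range(i + 1, num_sites):
            shared = [secrets.randbelow(MODULUS) for _ in range(length)]
            for k in range(length):
                masks[i][k] = (masks[i][k] + shared[k]) % MODULUS
                masks[j][k] = (masks[j][k] - shared[k]) % MODULUS
    return masks

def mask_update(update, mask):
    """What a site sends: its update hidden behind its mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]

def aggregate(masked_updates):
    """What the server computes: the element-wise sum, in which the masks cancel."""
    return [sum(column) % MODULUS for column in zip(*masked_updates)]

# Hypothetical integer-encoded updates from three sites.
updates = [[3, 10], [5, 20], [7, 30]]
masks = pairwise_masks(num_sites=3, length=2)
masked = [mask_update(u, m) for u, m in zip(updates, masks)]
total = aggregate(masked)
```

The server only ever sees the `masked` vectors, which look random on their own, yet `total` equals the true element-wise sum of the updates.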

Governance and Risk Management for Collaborative Research

Governance Frameworks and Data Use Agreements

Managing multi-institutional research effectively starts with having clear governance structures and formal agreements in place. In the U.S., Institutional Review Boards (IRBs) or HIPAA Privacy Boards play a critical role in reviewing research protocols that involve protected health information (PHI). These boards assess whether the research poses acceptable risks compared to its potential benefits, determine consent requirements, and ensure that data-sharing plans align with regulatory standards outlined in the Common Rule and HIPAA Privacy Rule [2].

To put these decisions into action, Data Use Agreements (DUAs) are essential. A well-crafted DUA outlines which specific data elements can be shared and how they may be used. It typically includes provisions for encryption, access restrictions, logging, and incident response protocols. When multiple institutions or vendors are involved, DUAs must align with Business Associate Agreements (BAAs) to ensure consistent responsibilities for PHI protection, subcontractor oversight, and breach notification across all parties [2][5].

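Many research networks now use the "5 Safes" framework to make governance decisions more transparent. This framework includes:

- Safe projects: Is the proposed use of the data appropriate?

- Safe people: Can the researchers be trusted to use the data appropriately?

- Safe settings: Does the access environment limit unauthorized use?

- Safe data: Is the disclosure risk in the data itself controlled?

- Safe outputs: Are the released results free of disclosure risk?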

This structured approach helps IRBs, compliance teams, and data stewards align on acceptable risks and control measures [2]. These governance strategies pave the way for comprehensive risk assessments, which are explored next.

Risk Assessment and Continuous Monitoring

Before starting any data-sharing initiative, organizations should conduct a detailed privacy and security risk assessment. This involves classifying the data - whether it’s identifiable or de-identified - and mapping how it flows across institutions, systems, and vendors. Key risks to consider include re-identification, unauthorized access, and potential technical vulnerabilities [2][3].

Re-identification analysis is particularly important. It identifies quasi-identifiers and evaluates their potential to single out individuals. Techniques like k-anonymity, l-diversity, and t-closeness can help determine the minimum group sizes needed to protect privacy, guiding decisions on data generalization or suppression. For aggregate outputs or query systems, threat modeling evaluates possible risks, such as reconstruction or membership inference attacks. This analysis can justify the use of safeguards like differential privacy, which adds calibrated noise, or query budgets to limit exposure [2][4].
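A query budget can be enforced with a small accountant that relies on basic sequential composition, under which the ε values of successive queries add up. The class below is a hypothetical sketch; tighter composition accounting exists, and the total budget is a policy decision rather than something the code can choose.

```python
class PrivacyBudget:
    """Tracks cumulative epsilon under basic sequential composition."""

    def __init__(self, total_epsilon):
        if total_epsilon <= 0:
            raise ValueError("total_epsilon must be positive")
        self.total_epsilon = total_epsilon
        self.spent = 0.0

    @property
    def remaining(self):
        """Epsilon still available for future queries."""
        return max(0.0, self.total_epsilon - self.spent)

    def charge(self, epsilon):
        """Record a query's epsilon, refusing it if the budget would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if self.spent + epsilon > self.total_epsilon + 1e-12:
            raise RuntimeError("privacy budget exhausted")
        self.spent += epsilon

# Hypothetical policy: a total budget of 1.0 spent in steps of 0.25.
budget = PrivacyBudget(total_epsilon=1.0)
for _ in range(4):
    budget.charge(0.25)
```

After four queries at ε=0.25 the budget is fully spent, and any further call to `charge` raises an error instead of answering the query.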

Risk management doesn’t stop after the initial assessment. Continuous monitoring is crucial to address emerging risks, so organizations should track signals that indicate a change in exposure over the life of a project.

Process-related signals, like overdue access reviews, expired DUAs, or uncompleted training sessions, should trigger intervention from governance committees. These interventions might include suspending access, tightening security controls, or revising agreements when risks exceed acceptable thresholds [2][3].
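Process-related signals like these lend themselves to simple automated checks. The sketch below assumes a hypothetical partner record with `dua_expires`, `last_access_review`, and `training_complete` fields and a 90-day review interval; both the schema and the interval are placeholders for whatever an organization's own policy defines.

```python
from datetime import date

def process_alerts(partners, today, review_interval_days=90):
    """Return (partner, issue) pairs for expired DUAs, overdue reviews, and missing training."""
    alerts = []
    for partner in partners:
        if partner["dua_expires"] < today:
            alerts.append((partner["name"], "DUA expired"))
        if (today - partner["last_access_review"]).days > review_interval_days:
            alerts.append((partner["name"], "access review overdue"))
        if not partner["training_complete"]:
            alerts.append((partner["name"], "training incomplete"))
    return alerts

# Hypothetical partner records for illustration only.
partners = [
    {"name": "site_a", "dua_expires": date(2025, 5, 1),
     "last_access_review": date(2025, 4, 15), "training_complete": True},
    {"name": "site_b", "dua_expires": date(2026, 1, 1),
     "last_access_review": date(2025, 1, 10), "training_complete": False},
]
alerts = process_alerts(partners, today=date(2025, 6, 1))
```

The resulting list could feed a governance committee's review queue, with each alert mapped to one of the interventions described above.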

With risks clearly identified, centralized platforms can now simplify and document the ongoing risk management process.

Using Centralized Risk Operations Platforms

Traditional risk management approaches are often fragmented and inefficient. Platforms like Censinet RiskOps™ address these challenges by centralizing the management of third-party and enterprise risks, specifically for healthcare data-sharing initiatives. This platform uses structured digital questionnaires tailored to healthcare frameworks to gather security and privacy data from research partners and vendors handling PHI. It then automatically scores responses, identifying gaps in controls such as encryption, identity and access management, data segregation, and incident response capabilities.

For research networks that span academic medical centers, healthcare organizations, and technology vendors, Censinet RiskOps™ consolidates risk data from assessments, incident reports, and control attestations into role-based dashboards. These dashboards allow organizations to monitor risks by project, vendor, dataset, and system. Metrics like encryption coverage, multi-factor authentication adoption, and patching timeliness can be tracked and correlated with specific research collaborations or data-sharing agreements.

Baptist Health's VP & CISO, James Case, shared that adopting the platform eliminated the need for spreadsheets in risk management and provided access to a broader network of hospitals for collaboration.

Building a Privacy-Preserving Data Sharing Strategy

Selecting the Right Privacy-Preserving Method

The first step in crafting a privacy-preserving data sharing strategy is to understand your research goals and the limitations you’re working within. For example, if regulations or institutional policies restrict the transfer of raw Protected Health Information (PHI), methods like federated learning or distributed analysis could be the answer. These approaches share only model parameters or aggregated statistics, keeping individual patient records secure. On the other hand, projects that require linking patient data across sites - like longitudinal outcome studies - might need specialized techniques such as data enclaves or privacy-preserving record linkage.

The complexity of your analysis also plays a big role in your choice. For simpler tasks, such as calculating descriptive statistics, de-identified data or aggregated exchanges may suffice. However, more advanced tasks like building high-dimensional AI or machine learning models often benefit from techniques like federated learning or secure computation. Low-risk scenarios may only require HIPAA-compliant de-identification, but high-risk studies - especially those involving rare diseases or sensitive conditions - call for stronger measures, such as differential privacy or synthetic data.

One useful tool is a decision matrix that evaluates privacy-preserving methods based on factors like privacy strength, scalability, governance complexity, and cost. For instance, a multi-institutional study using secure multi-party computation across millions of records successfully identified adverse drug reactions without compromising privacy [3].
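A decision matrix of this kind is just a weighted score per option. In the sketch below, the criteria follow the factors named above, but the option names, weights, and 1-to-5 ratings are placeholders that a team would replace with its own assessments; they say nothing about how any real method performs. Governance complexity and cost are expressed as "simplicity" and "affordability" so that a higher rating is always better.

```python
def weighted_score(ratings, weights):
    """Sum of rating x weight across all criteria."""
    return sum(weights[criterion] * ratings[criterion] for criterion in weights)

def rank_options(options, weights):
    """Option names ordered from highest to lowest weighted score."""
    return sorted(options,
                  key=lambda name: weighted_score(options[name], weights),
                  reverse=True)

# Placeholder weights (summing to 1) and placeholder ratings on a 1-5 scale.
weights = {
    "privacy_strength": 0.4,
    "scalability": 0.2,
    "governance_simplicity": 0.2,
    "affordability": 0.2,
}
options = {
    "candidate_a": {"privacy_strength": 5, "scalability": 3,
                    "governance_simplicity": 2, "affordability": 2},
    "candidate_b": {"privacy_strength": 3, "scalability": 4,
                    "governance_simplicity": 4, "affordability": 4},
}
ranking = rank_options(options, weights)
```

The value of the exercise lies less in the final number than in forcing stakeholders to agree on the weights before comparing methods.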

This process inevitably involves weighing the trade-offs between maintaining privacy and ensuring the data remains useful for research.

Balancing Privacy Protection with Data Utility

Privacy and data utility often pull in opposite directions - tightening privacy controls can limit the precision and scope of your research. A good starting point is to use conservative settings, such as lower privacy budgets in differential privacy or higher k-anonymity thresholds. These settings can then be adjusted incrementally to meet your specific research needs.

It’s essential to test your approach on diverse subpopulations to ensure that privacy measures don’t unintentionally obscure critical signals, especially for minority groups. For example, while you might need to preserve the accuracy of lab values or medication exposure windows, you could allow more distortion in less critical fields like fine-grained ZIP codes. Research indicates that differential privacy budgets of 1–3 can support 200–400 analytical queries while maintaining accuracy within 3–7% of results derived from raw data [3].

To assess the impact of your privacy measures, consider running parallel analyses using both the original and privacy-preserved datasets. This can help you measure any discrepancies in outcomes. Synthetic datasets, which offer lower utility but higher protection, can be useful for exploratory analyses, while tightly controlled environments can handle confirmatory research under stricter governance.
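A parallel analysis can be as simple as computing the same statistic on both datasets for each subgroup and flagging the subgroups where the results diverge too far. The subgroup names, the rates, and the 7% tolerance below are hypothetical values chosen for illustration.

```python
def relative_discrepancy(original, protected):
    """Per-subgroup relative difference between raw and privacy-preserved results."""
    return {group: abs(protected[group] - original[group]) / abs(original[group])
            for group in original}

def flag_subgroups(original, protected, tolerance):
    """Subgroups whose privacy-preserved result deviates by more than the tolerance."""
    gaps = relative_discrepancy(original, protected)
    return sorted(group for group, gap in gaps.items() if gap > tolerance)

# Hypothetical event rates computed on the raw and the privacy-preserved dataset.
original = {"overall": 0.20, "age_65_plus": 0.30, "rare_condition": 0.05}
protected = {"overall": 0.21, "age_65_plus": 0.29, "rare_condition": 0.08}
flagged = flag_subgroups(original, protected, tolerance=0.07)
```

In this example the overall and age-based results stay within tolerance, but the rare-condition subgroup does not, which is the kind of distortion that would otherwise go unnoticed in headline numbers.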

Once utility assessments are complete, layered protection controls can help reinforce your data-sharing strategy.

Implementing Layered Protection Controls

A robust data-sharing strategy doesn’t rely on a single solution - it combines technical, organizational, and legal safeguards to ensure privacy. Technical measures might include encryption, role-based access, and privacy-enhancing algorithms. Organizational safeguards could involve well-defined policies, regular training, access restrictions, and formal review processes. Legal measures, such as Data Use Agreements, Business Associate Agreements, and Institutional Review Board (IRB) oversight, ensure compliance with regulations like HIPAA.

Platforms like Censinet RiskOps™ can streamline the implementation of these layered controls. This tool centralizes risk management for multi-institutional research collaborations by using structured questionnaires tailored to healthcare frameworks. It evaluates security and privacy measures across research partners, covering areas like encryption, access management, data segregation, and incident response. For research networks that span academic medical centers and technology vendors, Censinet RiskOps™ offers role-based dashboards to monitor key metrics, such as encryption coverage, multi-factor authentication adoption, and patching timeliness, all aligned with data-sharing agreements.

Before scaling up, it’s wise to conduct a pilot study. Start small - perhaps with one or two health systems, a subset of patients, or a limited time frame. Include adversarial privacy testing, such as attempts to link records using known external datasets, to uncover vulnerabilities that standard metrics might miss. Document the results for governance bodies and refine your approach before expanding to additional sites or datasets.
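A basic linkage test for the pilot can be sketched as follows: take an external dataset that carries identities, match it to the released data on the shared quasi-identifiers, and count how many people map to exactly one released record. The field names and sample rows are hypothetical, and a real test would also try fuzzy matching and auxiliary attributes.

```python
from collections import defaultdict

def linkage_attack(released, external, quasi_identifiers):
    """Map each external identity to a released row index when the match is unique."""
    index = defaultdict(list)
    for position, row in enumerate(released):
        index[tuple(row[q] for q in quasi_identifiers)].append(position)
    matches = {}
    for person in external:
        candidates = index.get(tuple(person[q] for q in quasi_identifiers), [])
        if len(candidates) == 1:  # exactly one candidate means re-identification
            matches[person["id"]] = candidates[0]
    return matches

# Hypothetical released data and external dataset for illustration only.
released = [
    {"age": "30-39", "zip": "021", "diagnosis": "asthma"},
    {"age": "30-39", "zip": "021", "diagnosis": "diabetes"},
    {"age": "60-69", "zip": "941", "diagnosis": "copd"},
]
external = [
    {"id": "person_a", "age": "30-39", "zip": "021"},
    {"id": "person_b", "age": "60-69", "zip": "941"},
]
matches = linkage_attack(released, external, ["age", "zip"])
reidentification_rate = len(matches) / len(external)
```

Here `person_b` links to a single released record, so their diagnosis is exposed, while `person_a` remains hidden among two candidates. A nonzero rate like this is the sort of finding to document for governance bodies before expanding.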

Conclusion

Protecting privacy in data sharing is the backbone of trustworthy and effective healthcare research. Combining methods like de-identification, differential privacy, federated learning, and secure computation ensures strong privacy safeguards without diminishing the value of research. For instance, a multi-site outcomes study might use federated analysis to keep patient records local, while a public-facing dashboard could rely on differential privacy to secure aggregate statistics. Many leading U.S. healthcare organizations view HIPAA as a baseline, not the ultimate standard, and are implementing stronger measures to mitigate risks like re-identification, cyberattacks, and reputational harm. These technical solutions are bolstered by rigorous governance practices.

Tools like formal data use agreements, Institutional Review Board (IRB) oversight, and regular risk assessments ensure privacy protocols are consistently applied across research partnerships. Platforms such as Censinet RiskOps™ streamline this process by centralizing risk assessments, tracking security measures, and ensuring compliance for patient data, protected health information (PHI), clinical tools, and medical devices. These measures reinforce the operational strategies outlined earlier.

It’s clear that balancing privacy with data utility is not just possible - it’s essential. For example, secure multi-institution computation has been used to analyze 3.8 million patient records, uncovering adverse drug reactions that were undetectable at individual sites [3]. By carefully adjusting parameters and enforcing strict controls, healthcare research can achieve both robust privacy and meaningful insights.

Ultimately, maintaining patient trust is the cornerstone of healthcare research. Clear communication about data protection measures, coupled with strong technical and organizational safeguards, shows respect for patient autonomy while driving progress in evidence-based medicine. Privacy-preserving data sharing is not a passing concern - it’s a critical capability that will shape the future of collaborative research. By honoring ethical and legal responsibilities, healthcare systems can enhance care quality, improve safety, and deliver better outcomes for everyone.

FAQs

How does federated learning ensure patient data privacy while supporting collaborative healthcare research?

Federated learning protects patient privacy by ensuring sensitive data stays within each participating institution. Rather than sharing raw data, this method trains machine learning models on-site and exchanges only aggregated updates. This approach keeps private details, such as patient records, secure while still enabling collaboration across organizations.

With federated learning, healthcare researchers can join forces without violating privacy regulations or exposing protected health information (PHI) to unauthorized access.

How can healthcare research balance privacy with data usefulness?

Balancing privacy with the need for useful data in healthcare research is a tricky but essential task. To protect patient information, researchers often rely on methods like data anonymization, encryption, or de-identification. These techniques are effective at securing sensitive details, but they can sometimes strip away important nuances or reduce the accuracy of the data, which may affect the quality of research findings.

At the same time, sharing highly detailed datasets can significantly improve research outcomes but comes with a higher risk of privacy violations, such as the potential for patient re-identification. The challenge lies in finding the sweet spot: employing privacy-preserving methods that protect individuals' data while still allowing researchers to extract meaningful insights that push healthcare innovation forward.

How do governance frameworks help protect data and ensure compliance in multi-institutional healthcare research?

Governance frameworks are essential in safeguarding data and ensuring compliance within multi-institutional healthcare research. They define clear policies, roles, and responsibilities, creating a standardized approach to handling and sharing data across all participating organizations.

These frameworks ensure adherence to legal and regulatory standards, such as HIPAA, to protect sensitive patient information. They also include regular monitoring, audits, and risk assessments to identify and mitigate potential risks before they become issues. Tools like Censinet RiskOps™ play a key role in this process, simplifying risk management and compliance efforts. This approach not only secures collaboration among institutions but also keeps patient privacy at the forefront.

Key Points:

What is privacy-preserving data sharing in healthcare research?

- Privacy-preserving data sharing refers to the secure exchange of sensitive healthcare data while ensuring patient confidentiality and compliance with privacy regulations. It allows researchers to collaborate without exposing identifiable patient information.

Why is privacy-preserving data sharing important in healthcare?

Importance:

- Protects patient privacy and builds trust.

- Ensures compliance with regulations like HIPAA and GDPR.

- Facilitates collaboration among researchers, leading to faster medical advancements.

What are the challenges of privacy-preserving data sharing?

Key Challenges:

- Balancing data utility with privacy protection.

- Implementing advanced technologies like encryption and anonymization.

- Navigating complex regulatory landscapes.

- Ensuring interoperability between systems and organizations.

What technologies support privacy-preserving data sharing?

Technologies include:

- Federated Learning: Allows AI models to train on decentralized data without sharing raw data.

- Homomorphic Encryption: Enables computations on encrypted data without decryption.

- Differential Privacy: Adds calibrated statistical noise to query results, aggregate statistics, or model parameters to protect individual identities.

- Blockchain: Provides secure, transparent, and immutable data-sharing frameworks.

How does privacy-preserving data sharing benefit healthcare research?

Benefits:

- Accelerates medical discoveries by enabling secure collaboration.

- Builds trust among patients, researchers, and institutions.

- Reduces risks of data breaches and misuse.

- Enhances the quality and scope of research by providing access to diverse datasets.

What is the future of privacy-preserving data sharing in healthcare?

Future Outlook:

- Increased adoption of AI and machine learning for secure data analysis.

- Development of global data-sharing frameworks to standardize practices.

- Integration of blockchain for enhanced security and transparency.

- Continuous innovation in encryption and anonymization techniques.