Radiology AI Vendor Risk Management: Diagnostic Accuracy and Liability Considerations

Post Summary

Radiology AI tools promise faster image analysis and improved diagnostic support, but they also introduce risks like misdiagnoses, bias, and unclear legal accountability. To manage these risks effectively, healthcare providers must focus on:

- Vendor Evaluation: Assess AI performance using metrics like sensitivity, specificity, and bias testing. Request transparency via Model Cards detailing limitations and testing results.

- Liability Management: Establish strong contracts covering performance guarantees, data security, and compliance with regulations like HIPAA and the EU AI Act, whose main obligations apply from August 2026.

- Human Oversight: Radiologists should validate AI outputs to ensure reliable diagnoses and mitigate errors.

- Continuous Monitoring: Track AI performance over time to detect issues like bias or accuracy drift, ensuring tools remain reliable and compliant.

How to Evaluate Radiology AI Vendors for Diagnostic Accuracy

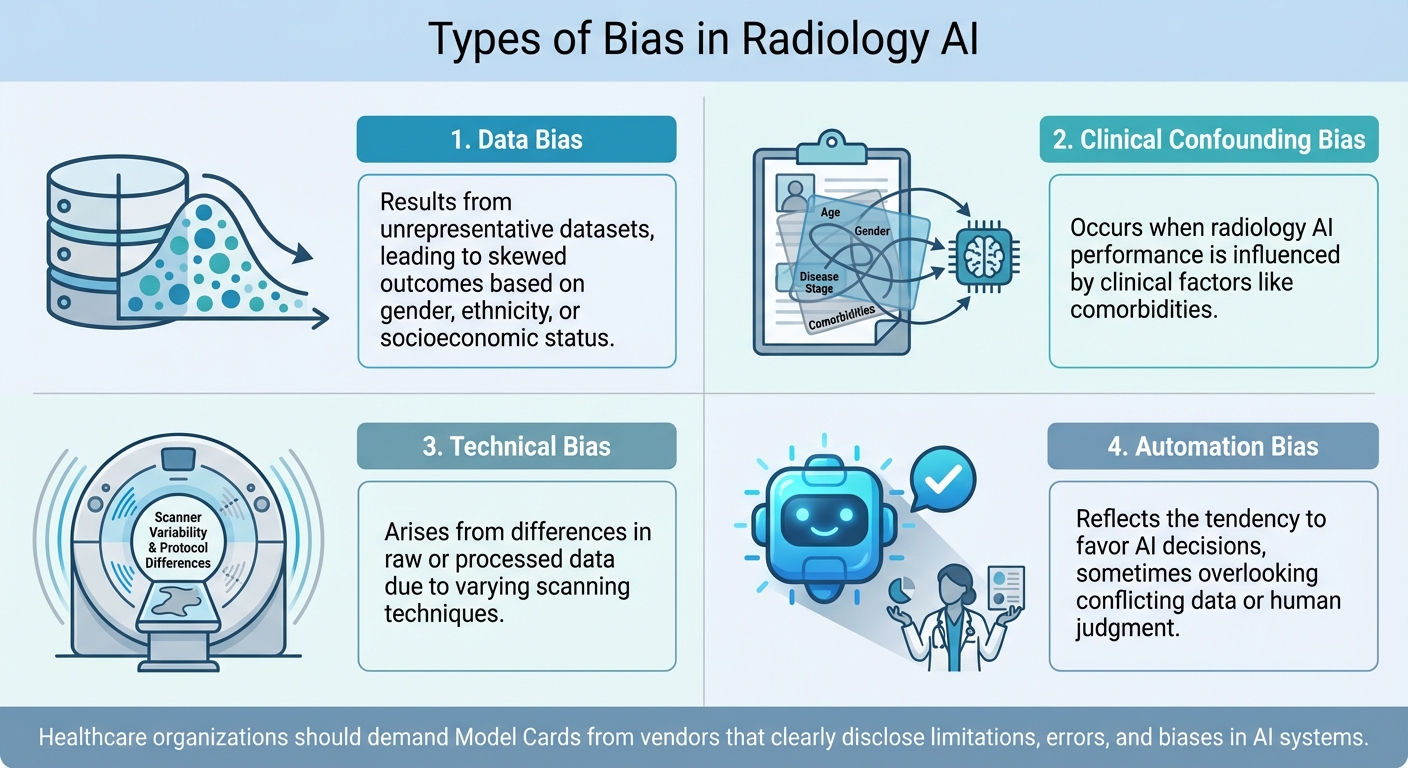

Four Types of Bias in Radiology AI Systems

AI Performance Assessment Frameworks

When assessing radiology AI vendors, structured frameworks provide a clear starting point. One such tool is the NIST AI Risk Management Framework (AI RMF) [7][8], a voluntary, lifecycle-based method for evaluating an AI system's trustworthiness, including its accuracy and how it manages bias. The FDA has also been developing methodologies to evaluate the reliability and robustness of AI algorithms [9]. In January 2025, for example, the FDA released draft guidance that includes an example Model Card: a structured summary of a model's characteristics, intended use, performance metrics, and limitations, meant to support AI safety and effectiveness across diverse populations [10]. Frameworks like these give organizations a common basis for managing risk when working with radiology AI vendors.
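
To make Model Card review repeatable during vendor intake, an organization could capture each vendor's card in a structured record and check it for gaps. The sketch below assumes a hypothetical `ModelCard` record whose field names loosely follow the topics listed above; they are illustrative and are not the FDA draft guidance's actual template.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ModelCard:
    """Hypothetical intake record; field names are illustrative, not the FDA template."""

    model_name: str = ""
    version: str = ""
    intended_use: str = ""
    # Reported metrics, e.g. {"sensitivity": 0.91, "specificity": 0.88}
    performance_metrics: dict[str, float] = field(default_factory=dict)
    # Populations, sites, or scanners the vendor validated on
    validation_populations: list[str] = field(default_factory=list)
    # Disclosed limitations, known failure modes, and biases
    known_limitations: list[str] = field(default_factory=list)


def missing_sections(card: ModelCard) -> list[str]:
    """Return the names of fields the vendor left empty, in declaration order."""
    return [f.name for f in fields(card) if not getattr(card, f.name)]


card = ModelCard(
    model_name="chest-xr-triage",
    version="2.1",
    intended_use="Triage of adult chest radiographs",
)
print(missing_sections(card))
# ['performance_metrics', 'validation_populations', 'known_limitations']
```

A non-empty result is a prompt to go back to the vendor before the assessment proceeds; it says nothing about whether the disclosed content is adequate.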

Diagnostic Accuracy Metrics

When choosing radiology AI tools, focus on context-specific performance metrics such as sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) [1][5]. Sensitivity is the share of truly abnormal studies the AI flags, and specificity is the share of normal studies it correctly leaves unflagged. PPV and NPV answer the clinician's question from the other direction (how likely a flagged study is to be truly abnormal, or an unflagged one truly normal), and unlike sensitivity and specificity they shift with disease prevalence, so a vendor's reported values may not carry over to a population with a different case mix. A balanced, multi-metric evaluation tailored to the task, whether classification, detection, or segmentation, is needed to judge how the AI will perform in real-world clinical use. Vendors should validate their algorithms on diverse datasets that closely reflect the patient populations the AI will serve [10]. Such validation shows whether accuracy holds across those populations and helps surface potential biases.
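
The four metrics follow directly from confusion-matrix counts on a labeled validation set. The minimal sketch below computes them and uses Bayes' rule to estimate how PPV would change at a different disease prevalence. The `ConfusionCounts` type and function names are illustrative, and the example counts are invented for demonstration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # abnormal studies the AI flagged
    fp: int  # normal studies the AI flagged
    tn: int  # normal studies the AI left unflagged
    fn: int  # abnormal studies the AI missed


def diagnostic_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Sensitivity, specificity, PPV, and NPV from confusion-matrix counts."""
    return {
        "sensitivity": c.tp / (c.tp + c.fn),  # share of abnormal studies detected
        "specificity": c.tn / (c.tn + c.fp),  # share of normal studies cleared
        "ppv": c.tp / (c.tp + c.fp),  # chance a flagged study is truly abnormal
        "npv": c.tn / (c.tn + c.fn),  # chance an unflagged study is truly normal
    }


def ppv_at_prevalence(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Expected PPV at a given disease prevalence, via Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


counts = ConfusionCounts(tp=90, fp=45, tn=855, fn=10)  # invented example, 10% prevalence
m = diagnostic_metrics(counts)
print({k: round(v, 3) for k, v in m.items()})
# {'sensitivity': 0.9, 'specificity': 0.95, 'ppv': 0.667, 'npv': 0.988}
print(round(ppv_at_prevalence(m["sensitivity"], m["specificity"], 0.01), 3))
# 0.154
```

With the same sensitivity and specificity, PPV falls from about 0.67 at 10% prevalence to about 0.15 at 1%, which is why vendor-reported PPV should be checked against the deploying site's own case mix. The functions do not guard against zero denominators (for example, a validation set with no abnormal studies).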

Reducing Bias in Radiology AI

Bias in radiology AI systems is a serious concern that can lead to inequitable outcomes [3][6]. The FDA defines AI bias as "a potential tendency to produce incorrect results in a systematic, but sometimes unforeseeable way, which can impact the safety and effectiveness of the device within all or a subset of the intended use population" [10]. Bias often stems from training data that fails to represent the intended patient population, human labeling errors, or inconsistencies in scanning devices and protocols [3][6].

"AI systems rely on training data, lack context and are more likely to exhibit bias if the data used to train the AI system are not representative of the patient population on which the AI system is used" [3][6].

To combat bias, healthcare organizations should demand Model Cards or System Cards from vendors. These documents should clearly disclose the limitations, errors, and biases in the AI system [3][6][10]. Transparency is key to making informed decisions about AI tools. Beyond this, organizations should ensure training data is representative, implement post-market quality assurance to monitor for concept drift (where changes in patient populations or software updates may affect performance), and maintain human oversight to catch inappropriate AI outputs.

"Addressing ethical issues in AI will require a combination of technical solutions, government activity, regulatory oversight, and ethical guidelines developed in collaboration with a wide range of stakeholders, including clinicians, patients, AI developers, and ethicists" [3][6].

| Type of Bias | Explanation |
|---|---|
| Data bias | Results from unrepresentative datasets, leading to skewed outcomes based on gender, ethnicity, or socioeconomic status [3][6]. |
| Clinical confounding bias | Occurs when radiology AI performance is influenced by clinical factors like comorbidities [3][6]. |
| Technical bias | Arises from differences in raw or processed data due to varying scanning techniques [3][6]. |
| Automation bias | Reflects the tendency to favor AI decisions, sometimes overlooking conflicting data or human judgment [3][6]. |
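
Data and technical bias often surface as a performance gap between subgroups, such as patient demographics or scanner models. A minimal audit, assuming labeled validation records tagged with a subgroup, compares each subgroup's sensitivity with the overall rate and flags gaps above a tolerance. The record layout, function names, and the 0.05 default tolerance are assumptions for illustration.

```python
from collections import defaultdict
from collections.abc import Iterable

# Each record: (subgroup label, truly abnormal?, flagged by the AI?)
Record = tuple[str, bool, bool]


def subgroup_sensitivity(records: Iterable[Record]) -> dict[str, float]:
    """Sensitivity computed separately for each subgroup."""
    detected: dict[str, int] = defaultdict(int)
    abnormal: dict[str, int] = defaultdict(int)
    for group, is_abnormal, flagged in records:
        if is_abnormal:
            abnormal[group] += 1
            if flagged:
                detected[group] += 1
    return {g: detected[g] / abnormal[g] for g in abnormal}


def sensitivity_gaps(records: Iterable[Record], max_gap: float = 0.05) -> dict[str, float]:
    """Subgroups whose sensitivity trails the overall rate by more than max_gap."""
    records = list(records)
    positives = [r for r in records if r[1]]
    overall = sum(1 for r in positives if r[2]) / len(positives)
    return {
        g: s for g, s in subgroup_sensitivity(records).items() if overall - s > max_gap
    }


records = (
    [("group_a", True, True)] * 47 + [("group_a", True, False)] * 3
    + [("group_b", True, True)] * 40 + [("group_b", True, False)] * 10
)
print(sensitivity_gaps(records))  # {'group_b': 0.8}
```

Small subgroups produce noisy estimates, so a flagged gap is a prompt for closer review (for example, with confidence intervals or more data), not proof of bias. The same pattern applies to specificity or any other metric.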

Managing Liability Risks in Radiology AI

Legal and Regulatory Compliance Requirements

Healthcare organizations implementing radiology AI must navigate a complex set of regulatory requirements. At the core is HIPAA, which governs how patient data is handled. An AI vendor that creates, receives, maintains, or transmits protected health information on a provider's behalf becomes a business associate, and the provider remains accountable for ensuring that relationship meets HIPAA's standards. For organizations operating in or serving patients within the European Union, the EU AI Act, whose main obligations apply from August 2, 2026, classifies radiology AI tools as "high-risk AI." This classification carries specific mandates: organizations must use only compliant AI solutions, maintain human oversight, log performance data, and conduct post-market monitoring [11]. These regulatory frameworks set the foundation for deploying AI lawfully, but organizations also need strong contractual protections to manage liability risks effectively.

Contract Protections with AI Vendors

A well-structured contract is often the first line of defense against liability. Robert Kantrowitz, Partner at Kirkland & Ellis LLP, highlights the unique challenges of negotiating contracts for AI systems:

"Negotiating contracts with AI vendors is not unlike contracting with other Software as a Service (SaaS) providers; however, certain contractual provisions become even more important when those contracts involve software that can replicate human thought processes, such as clinical support" [13].

Healthcare organizations should ensure their contracts address several key areas [13]:

- Clearly defined performance metrics, with remedies for non-performance such as financial credits or termination rights.

- Requirements for independent third-party testing to assess accuracy and bias.

- Indemnification clauses covering data breaches and intellectual property issues.

- Full ownership rights over AI-generated outputs.

- Limits on vendor access to input data, with clear timeframes for data retention.

- Business Associate Agreements (BAAs) to safeguard patient data.

- Provisions requiring vendors to notify the organization promptly about any "AI issues."

- Mechanisms for updating the contract as AI regulations evolve, so it stays aligned with emerging legal requirements.

Beyond contracts, operational safeguards are equally critical.

Maintaining Human Oversight in AI Diagnostics

Even with strong contracts in place, human oversight remains essential for managing liability risks in radiology AI. No matter how advanced the technology, physicians retain ultimate responsibility for diagnostic decisions and reporting. This isn't just a legal necessity - it ensures accountability and maintains the integrity of clinical care. AI tools should be treated as supplements to radiologists' expertise, offering a "second set of eyes" to identify abnormalities and reduce the risk of missed diagnoses. By validating AI outputs through their own expertise, radiologists ensure that final decisions are both informed and reliable. This collaborative approach - combining AI's efficiency with human judgment - creates a stronger diagnostic process, reinforcing the role of the medical professional in interpreting findings within the broader clinical context.

Using Censinet RiskOps for Radiology AI Risk Management

Censinet RiskOps offers tools specifically designed to address concerns about diagnostic accuracy and liability in radiology AI by simplifying risk assessments and ensuring thorough oversight.

Automating Vendor Assessments with Censinet RiskOps™

Managing radiology AI vendors involves navigating a mix of technical, clinical, and security considerations - a process that can be both complex and time-intensive. Censinet RiskOps™ transforms this challenge by automating the entire vendor risk assessment process. This approach allows healthcare organizations to evaluate AI vendors more quickly and effectively, reducing the burden on risk management teams without cutting corners.

The platform simplifies tasks like gathering and analyzing vendor security questionnaires, documentation, and evidence. For radiology AI vendors, this means assessing diagnostic accuracy claims, data handling procedures, and compliance practices in a fraction of the usual time. Importantly, the platform incorporates a human-in-the-loop approach, ensuring that risk teams stay in charge through customizable rules and review steps. Rather than replacing human judgment, automation enhances it, creating a foundation for more efficient governance and faster decision-making.

Improving Governance with Censinet Connect™

Automation is just one piece of the puzzle - effective governance is equally critical. Radiology AI governance requires input from various stakeholders, including clinical leaders, IT security teams, legal advisors, compliance officers, and AI governance committees. Censinet Connect™ acts as a central hub for collaboration, ensuring all parties have visibility into vendor risks and compliance updates.

When assessments uncover potential issues - like insufficient bias testing or vague performance metrics - Censinet Connect™ routes these findings to the appropriate team members, including AI governance representatives. This ensures that the right people address the right problems quickly. The platform also features an intuitive AI risk dashboard, offering a real-time overview of policies, risks, and tasks across the organization. By centralizing oversight, organizations can respond more effectively to emerging challenges, supported by tools designed to streamline these processes.

Accelerating Risk Management with Censinet AI™

In the fast-paced world of radiology AI, speed is crucial. As healthcare organizations adopt more AI tools, managing risks efficiently becomes even more important. Censinet AI™ accelerates the third-party risk assessment process, helping organizations address risks faster without sacrificing quality.

The platform enables vendors to complete security questionnaires in seconds, automatically summarizes evidence and documentation, and captures critical details about product integration and potential fourth-party risks. For radiology AI specifically, this means understanding how products interact with existing PACS systems, what data they access, and which external services they rely on.

Censinet AI™ also benchmarks vendor performance against industry standards, helping organizations identify vendors that meet acceptable levels of diagnostic accuracy, security, and compliance. With continuous monitoring workflows, the platform alerts risk teams to changes in vendor risk profiles - whether due to security incidents, regulatory updates, or shifts in AI model performance. This ongoing vigilance ensures risks are managed throughout the vendor relationship, not just during the initial assessment.


Risk Mitigation and Compliance Best Practices

Once initial vendor assessments are complete, ensuring that AI systems in radiology remain secure, compliant, and effective requires ongoing risk management. This involves addressing immediate concerns while also tackling long-term governance challenges.

Conducting Security Audits of AI Vendors

Standard cybersecurity checklists simply don't cut it when evaluating radiology AI vendors. Organizations need an Enterprise Risk Management (ERM) framework specifically designed for the unique vulnerabilities of AI systems [4]. Start by defining the clinical and operational goals for each AI tool. This includes understanding its intended use cases, data needs, and success metrics, which helps shape the scope and depth of the audit [13][14][3][6].

When issuing RFPs, include detailed technical, ethical, and regulatory requirements [13]. During the evaluation process, pay close attention to the vendor's handling of data sensitivity, such as their privacy safeguards, security protocols, and intellectual property management [13]. Scrutinize their training data sources, ownership, versioning, traceability, and testing practices. Independent third-party assessments can help confirm the tool's accuracy, fairness, and representativeness [15][13]. Also, verify that the vendor has obtained the necessary regulatory approvals. Understand how the AI tool is classified - whether it's advisory, semi-automated, or fully automated - and ensure it aligns with frameworks like the EU AI Act [13][15][3][6].

Dig into the model attributes by requesting details about the type of AI model, its learning methods, potential biases, demographic fairness, autonomy levels, and the degree of human oversight required [15]. Knowing how the model was developed can reveal potential weaknesses before they impact patient care. These audit steps lay the groundwork for effective ongoing oversight.

Setting Up Continuous Monitoring Workflows

Radiology AI systems require constant attention. Deployments in practice have shown performance drift and false alerts over time [4], which underscores the need for continuous monitoring to catch bias, inaccuracies, or even hallucinations in AI outputs [12].

"Continuous improvement is critical to maintaining an effective risk posture." - Performance Health Partners [4]

Set up real-time risk identification systems to track AI-related risks across integration points, from diagnostic tools to billing systems [4]. Keep an eye on performance by monitoring changes in diagnostic event frequencies month-to-month and setting alerts for deviations outside normal ranges [12][3][6]. Another approach is to maintain a control sample - a fixed set of test cases regularly evaluated against the algorithm [12][3][6].
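
Both monitoring approaches above can be sketched in a few lines. The first function treats each month's share of AI-flagged studies as the "diagnostic event frequency" and flags months outside mean ± k standard deviations of a baseline period; the second scores a fixed control sample against reference labels. The function names, the six-month baseline, and k = 3 are illustrative assumptions, not thresholds from the cited sources, and the example numbers are invented.

```python
from statistics import mean, stdev


def out_of_range_months(
    monthly_rates: dict[str, float], baseline_months: int = 6, k: float = 3.0
) -> list[str]:
    """Months after the baseline whose rate falls outside mean +/- k * SD of the baseline.

    Assumes keys were inserted in chronological order (e.g. "2025-01", "2025-02", ...).
    """
    months = list(monthly_rates)
    baseline = [monthly_rates[m] for m in months[:baseline_months]]
    center, spread = mean(baseline), stdev(baseline)  # stdev needs >= 2 baseline months
    low, high = center - k * spread, center + k * spread
    return [m for m in months[baseline_months:] if not low <= monthly_rates[m] <= high]


def control_sample_agreement(reference: dict[str, bool], current: dict[str, bool]) -> float:
    """Share of fixed control cases whose current AI output matches the reference label.

    A case missing from `current` counts as a mismatch.
    """
    matches = sum(current.get(case) == label for case, label in reference.items())
    return matches / len(reference)


# Share of studies flagged as abnormal each month (invented numbers)
rates = {"2025-01": 0.12, "2025-02": 0.11, "2025-03": 0.13, "2025-04": 0.12,
         "2025-05": 0.11, "2025-06": 0.13, "2025-07": 0.12, "2025-08": 0.19}
print(out_of_range_months(rates))  # ['2025-08']

reference = {"case-01": True, "case-02": False, "case-03": True, "case-04": False}
today = {"case-01": True, "case-02": False, "case-03": False, "case-04": False}
print(control_sample_agreement(reference, today))  # 0.75
```

A month flagged by the first check, or a drop in control-sample agreement after a software update, would then go through the organization's error reporting process rather than trigger automatic action.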

Establish error reporting protocols with clear points of contact. Require vendors to provide regular performance updates and include contract clauses that obligate them to notify customers about any discovered AI issues. Vendors should also assist in addressing these problems and notify other affected parties [13].

Oversight should span multiple departments. Medical directors and clinical leaders should monitor AI tool use through peer review programs, ensuring the tools are used appropriately and align with care standards [13]. IT teams and HIPAA oversight groups should focus on security, encryption, data storage, and access, conducting regular updates at least once a year [13]. Use incident reporting software to document and categorize issues like algorithm failures, unexpected outcomes, or patient complaints. Analyzing this data can reveal trends that guide technical updates [4]. All insights from continuous monitoring should feed into centralized AI governance dashboards.
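
Trend analysis of incident reports can start with simple counts. The sketch below assumes a hypothetical `Incident` record exported from incident reporting software (the tool names and categories are invented), tallies reports per month and category, and lists categories whose count rose from one month to the next.

```python
from collections import Counter
from collections.abc import Iterable
from datetime import date
from typing import NamedTuple


class Incident(NamedTuple):
    """Hypothetical export row from incident reporting software."""

    reported: date
    category: str  # e.g. "algorithm_failure", "unexpected_outcome", "patient_complaint"
    tool: str


def monthly_counts(incidents: Iterable[Incident]) -> Counter:
    """Number of incidents per (YYYY-MM, category)."""
    return Counter((i.reported.strftime("%Y-%m"), i.category) for i in incidents)


def rising_categories(incidents: Iterable[Incident], prev_month: str, this_month: str) -> list[str]:
    """Categories reported more often in this_month than in prev_month."""
    counts = monthly_counts(incidents)
    categories = {category for _, category in counts}
    # Counter returns 0 for missing keys, so months without reports compare cleanly
    return sorted(c for c in categories if counts[(this_month, c)] > counts[(prev_month, c)])


log = [
    Incident(date(2025, 5, 3), "algorithm_failure", "chest-xr-triage"),
    Incident(date(2025, 5, 20), "patient_complaint", "mammo-cad"),
    Incident(date(2025, 6, 1), "algorithm_failure", "chest-xr-triage"),
    Incident(date(2025, 6, 9), "algorithm_failure", "chest-xr-triage"),
]
print(rising_categories(log, "2025-05", "2025-06"))  # ['algorithm_failure']
```

Counts like these are raw material for the governance dashboard; a single month's rise is a signal to investigate, not a trend on its own.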

Using AI Governance Dashboards

Centralized dashboards simplify the management of AI-related risks, policies, and compliance. By integrating AI risks into existing organizational dashboards, leadership gains the insights needed for informed decision-making [4]. These dashboards should offer a comprehensive view of AI operations, combining governance frameworks - like model development, data lineage, and performance tracking - with traditional vendor risk controls, such as security, privacy, and contract terms [15].

Dashboards help organizations quickly spot gaps or dependencies introduced by AI vendors [15]. For low-risk third-party tools, periodic spot checks can ensure their use remains appropriate [14]. Regularly updating ERM processes is crucial to keep up with the fast pace of AI advancements and evolving regulations [4]. This proactive approach ensures that radiology AI systems remain safe, effective, and compliant as technology and regulatory landscapes continue to shift [12].

Conclusion: Safe and Effective Radiology AI Deployment

Deploying radiology AI successfully requires a well-rounded Enterprise Risk Management framework that tackles the specific challenges and vulnerabilities unique to this technology [4]. This framework is the backbone of efforts to ensure accurate diagnoses and reduce liability risks.

To safeguard diagnostic precision and manage legal exposure, it's critical to thoroughly assess vendor data, model characteristics, and fairness. Additionally, strong contracts and consistent human oversight are non-negotiable. Without these measures, healthcare organizations risk implementing AI that might perform poorly for certain patient groups or produce inaccurate results, potentially leading to inappropriate medical interventions [13].

Real-time monitoring and regular vendor updates are equally important to detect performance issues or biases as they arise [4][2]. Cultivating a culture that prioritizes oversight, transparency, and accountability is key to long-term success.

Building on thorough vendor evaluations, tools like Censinet RiskOps™ streamline AI policy and risk management. This platform accelerates vendor assessments, highlights critical findings for key stakeholders, and provides an AI risk dashboard for real-time insights. These features help organizations take timely action while maintaining essential human oversight.

As AI technology continues to advance, healthcare organizations must regularly update their risk management strategies, monitoring systems, and vendor evaluations. Striking the right balance between innovation and patient safety ensures that AI's potential is harnessed responsibly, with accountability at the forefront. By staying proactive, organizations can reap AI's benefits while minimizing risks.

FAQs

How can healthcare organizations verify the accuracy of radiology AI systems?

Healthcare organizations can verify that radiology AI systems deliver accurate results by validating them on diverse, representative datasets. This helps confirm that the tools perform consistently across different patient groups and imaging scenarios.

After implementation, it's crucial to continuously monitor the AI's performance. Regular checks can identify and address any shifts or drift in accuracy, ensuring the system remains reliable over time. Additionally, integrating these tools into clinical workflows requires careful oversight. Routine quality assessments and close collaboration with radiologists are key to maintaining diagnostic accuracy and prioritizing patient safety.

When these measures are paired with strong risk management practices, healthcare providers can reduce errors and stay aligned with legal and regulatory requirements.

What key legal terms should be included in contracts with radiology AI vendors?

When creating contracts with radiology AI vendors, it's crucial to address key legal and operational aspects to ensure smooth collaboration and safeguard your organization. These agreements should include provisions for transparency in how the AI functions, steps for bias mitigation to promote fairness in diagnostic outcomes, and performance validation to ensure the technology aligns with established clinical standards.

The contracts should also specify data security protocols to protect sensitive information, outline ongoing monitoring duties to maintain system reliability, and ensure compliance with FDA regulations and other relevant laws. Additionally, it's vital to clearly define liability and how responsibilities are shared in cases of errors or misdiagnoses, ensuring accountability and reducing potential risks.

What steps can organizations take to reduce bias in radiology AI systems?

To make radiology AI systems more equitable, organizations should focus on using diverse and representative training datasets that mirror the wide range of patient demographics. This approach helps ensure the AI can perform accurately across different populations.

Regular audits of the AI's performance are also crucial. By examining how the system operates across various groups, disparities in diagnostic accuracy can be spotted and addressed effectively.

On top of that, incorporating bias mitigation techniques during the development process and prioritizing explainable AI models can build trust among clinicians and patients alike. These practices not only promote fairness but also enhance the reliability of AI tools in radiology.