Risk Intelligence 3.0: How Machine Learning is Redefining Risk Programs

Post Summary

Healthcare organizations face increasing cyber threats, especially in the wake of rising ransomware attacks and costly data breaches. Traditional risk management methods, like periodic assessments, fail to address these challenges effectively. Risk Intelligence 3.0, powered by machine learning, offers a better way forward by enabling real-time threat detection, compliance monitoring, and risk prioritization.

Key Takeaways:

- Why It Matters: Cybercriminals target healthcare systems, exploiting vulnerabilities in electronic health records (EHRs), medical devices, and cloud storage. Machine learning helps predict and mitigate these risks faster than manual methods.

- How It Works: AI consolidates risk data into a single dashboard, automates compliance tasks, and detects anomalies in real time - shortening the time to identify incidents by 98 days.

- Building a Strong System: Success requires clear goals, reliable data, and adherence to regulations like HIPAA and NIST frameworks.

- Predictive Analytics: Models like BERT and XGBoost help identify vulnerabilities and predict attack patterns, while anomaly detectors like Isolation Forest reached over 99% accuracy at flagging EHR anomalies in one study.

- Third-Party Risk Management: AI evaluates vendor security, predicts risks, and ensures compliance with strict data-use policies.

Machine learning transforms risk management from reactive to proactive, helping healthcare providers safeguard patient data, prevent financial losses, and maintain compliance. Starting small with pilot projects and focusing on strong data governance can set the foundation for success.

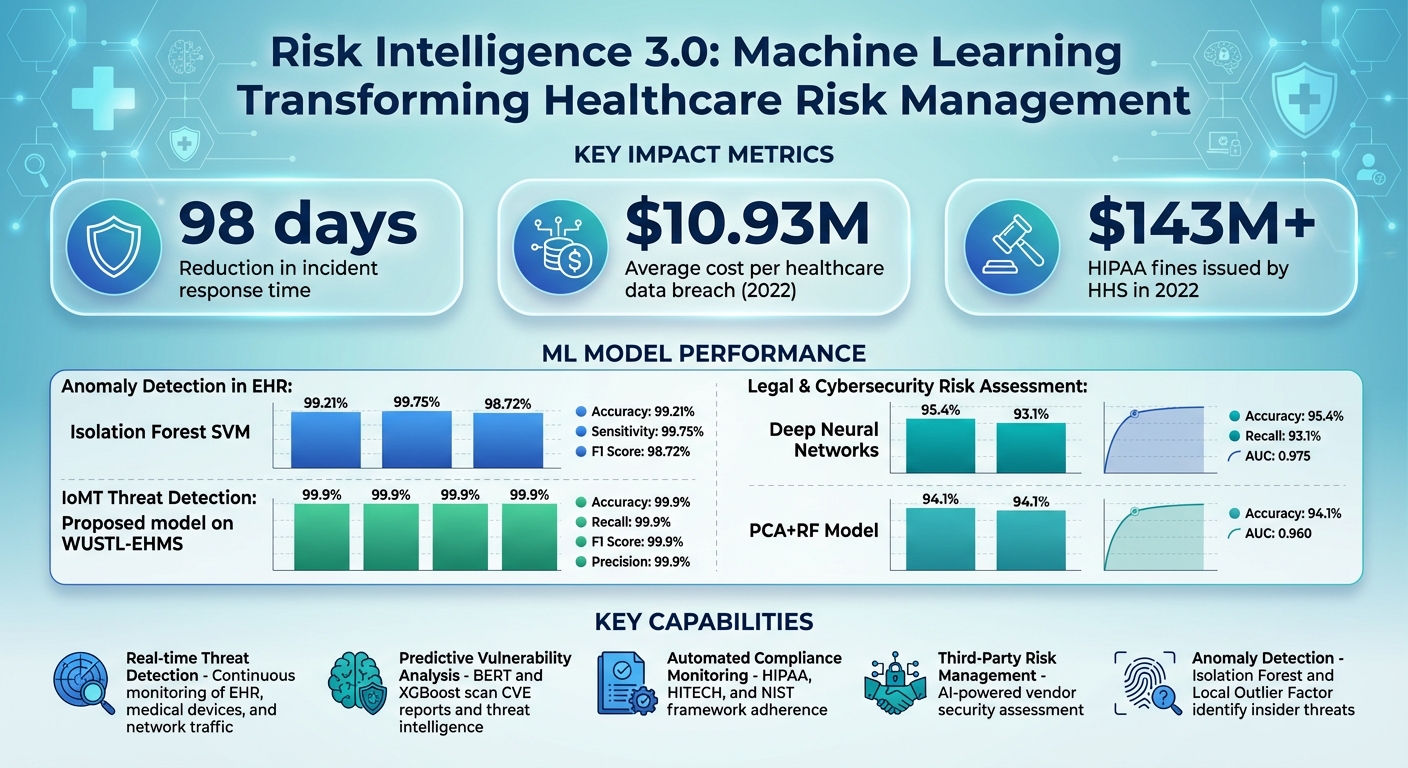

Machine Learning Impact on Healthcare Risk Management: Key Statistics and Performance Metrics

Building the Foundation for Machine Learning in Risk Programs

To establish a strong machine learning risk program, start by setting clear goals, ensuring your data is reliable, and adhering to U.S. regulations.

Setting Risk Objectives and Scope

Begin by aligning your risk objectives with your organization's priorities - such as safeguarding patient health information (PHI), ensuring uninterrupted care, and maintaining financial stability. These objectives should translate into measurable outcomes, like quicker detection of unauthorized access to electronic health records (EHR) or minimizing vulnerabilities from third-party vendors.

Next, identify and map your key assets. This includes EHR systems, medical devices (like infusion pumps and imaging equipment), cloud storage environments, and billing platforms. Understanding where your data resides and how it moves across these systems is critical. Keep an up-to-date inventory of all AI systems, documenting their roles, data dependencies, and security considerations. You might also classify AI tools using a five-level autonomy scale to determine how much human oversight each system requires based on its risk level [6]. The Health Sector Coordinating Council (HSCC) Cybersecurity Working Group (CWG) emphasizes:

The goal is to ensure that healthcare innovation is matched by a steadfast commitment to patient safety, data privacy, and operational resilience [6].
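The inventory and autonomy classification described above can be sketched as a small data structure. This is a minimal illustration under stated assumptions, not the HSCC scheme itself: the level names, the `AISystem` fields, the example systems, and the oversight rule are all hypothetical placeholders.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List


class AutonomyLevel(IntEnum):
    """Hypothetical five-level scale; the labels are illustrative only."""
    ASSISTIVE = 1
    ADVISORY = 2
    SUPERVISED = 3
    CONDITIONAL = 4
    AUTONOMOUS = 5


@dataclass
class AISystem:
    name: str
    role: str
    autonomy: AutonomyLevel
    handles_phi: bool
    data_dependencies: List[str] = field(default_factory=list)

    def oversight(self) -> str:
        """Assumed rule: more autonomy or PHI access means more human review."""
        if self.autonomy >= AutonomyLevel.CONDITIONAL:
            return "human approval before every action"
        if self.autonomy >= AutonomyLevel.SUPERVISED or self.handles_phi:
            return "periodic human review"
        return "standard monitoring"


# Made-up inventory entries for illustration.
inventory = [
    AISystem("triage-assistant", "suggests ticket priority",
             AutonomyLevel.ADVISORY, False, ["helpdesk tickets"]),
    AISystem("ehr-access-monitor", "flags unusual record access",
             AutonomyLevel.SUPERVISED, True, ["EHR audit logs"]),
    AISystem("auto-quarantine", "isolates infected endpoints",
             AutonomyLevel.CONDITIONAL, False, ["endpoint telemetry"]),
]

for system in inventory:
    print(f"{system.name}: level {system.autonomy.value}, {system.oversight()}")
```

Keeping the oversight rule next to the inventory record means a change in a system's autonomy level automatically changes the review it receives.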

Once your objectives are clear and your assets are mapped, the focus shifts to consolidating and preparing your data.

Preparing Data for Machine Learning

Machine learning models are only as reliable as the data they're trained on. Healthcare organizations must gather data from various sources - vulnerability scans, network telemetry, incident logs, and third-party risk assessments - to build a comprehensive view. However, this data is often scattered across departments, making it challenging to achieve an organization-wide perspective.

Centralize data from clinical, operational, and financial systems using HL7 FHIR and APIs. Data quality is critical, so regularly audit and clean your datasets to address missing values and inconsistencies [7][4]. For numerical data, such as treatment costs, apply z-score normalization to standardize scales. For unstructured text data, like diagnostic descriptions, use techniques like Term Frequency-Inverse Document Frequency (TF-IDF) to extract meaningful insights [4].
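To make the two preprocessing steps concrete, here is a minimal standard-library sketch of z-score normalization and a plain TF-IDF weighting. The cost values and diagnostic phrases are made up, and the whitespace tokenizer is a simplification. In practice a maintained library such as scikit-learn (`StandardScaler`, `TfidfVectorizer`) would likely be used instead; note that `TfidfVectorizer` applies a smoothed IDF by default, so its numbers differ from this unsmoothed version.

```python
import math
from collections import Counter
from statistics import mean, pstdev


def z_scores(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def tf_idf(documents):
    """Return one {term: weight} dict per document using unsmoothed TF * IDF."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: the number of documents each term appears in.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


treatment_costs = [1200.0, 1500.0, 900.0, 20000.0]  # made-up values
print([round(z, 2) for z in z_scores(treatment_costs)])

notes = [
    "acute chest pain",
    "chronic chest infection",
    "acute kidney injury",
]  # made-up diagnostic descriptions
for row in tf_idf(notes):
    print({term: round(weight, 3) for term, weight in row.items()})
```

Terms that appear in fewer documents (such as "pain") receive a higher weight than terms shared across documents (such as "chest"), which is what lets a downstream model focus on the distinguishing words.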

HIPAA compliance must be integrated into every aspect of your data governance. Treat AI workflows handling PHI with the same rigor as any other PHI process - use encrypted data storage, enforce role-based access controls, and maintain detailed audit logs [3]. Avoid using public or consumer-grade AI tools for PHI, and educate staff about the risks of "shadow AI" (unapproved tools) to prevent unauthorized data exposure [3].
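As a rough illustration of pairing role-based access control with an audit trail, the sketch below checks each request against a role map and logs the decision whether it is allowed or denied. The roles, permissions, user names, and resource names are hypothetical; a real deployment would pull roles from the organization's identity system and write to tamper-resistant, encrypted log storage.

```python
import json
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_logger = logging.getLogger("phi_audit")

# Hypothetical role-to-permission map; real roles come from your IAM system.
ROLE_PERMISSIONS = {
    "clinician": {"read_phi"},
    "ml_engineer": {"read_deidentified"},
    "privacy_officer": {"read_phi", "read_deidentified", "export_audit_log"},
}


def authorize(user, role, action, resource):
    """Check the action against the role and record the decision either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    }
    # Denied requests are logged too, since they are often the useful signal.
    audit_logger.info(json.dumps(entry))
    return allowed


print(authorize("dr_lee", "clinician", "read_phi", "ehr/patient-records"))
print(authorize("sam", "ml_engineer", "read_phi", "ehr/patient-records"))
```

Unknown roles fall through to an empty permission set, so the default is to deny.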

Once your data is centralized and standardized, the next step is ensuring compliance with U.S. cybersecurity and AI frameworks.

Meeting U.S. Cybersecurity and AI Framework Requirements

Compliance with U.S. regulations is non-negotiable. It not only ensures legal adherence but also strengthens your machine learning risk program by aligning security measures with established standards. The NIST Cybersecurity Framework (CSF) offers a structured approach to identifying, protecting, detecting, responding to, and recovering from cyber threats. Meanwhile, the NIST AI Risk Management Framework (AI RMF) addresses AI-specific risks and helps implement tailored security controls [6].

Establish clear roles and clinical oversight throughout the AI lifecycle [6]. Align your AI governance practices with regulations like HIPAA, HITECH, and FDA requirements if your AI tools qualify as Software as a Medical Device (SaMD). Regularly evaluate AI workflows for vulnerabilities such as data poisoning or inference attacks, and mitigate risks based on their potential impact [3]. For third-party AI vendors, ensure contracts include strict data-use limitations, security responsibilities, and audit rights [3]. Build security into the design phase of AI systems and conduct adversarial testing to validate data integrity and detect anomalies [3].

Using Predictive Analytics for Risk Management

Predictive analytics empowers healthcare organizations to stay ahead of cybersecurity threats by analyzing patterns within their infrastructure. This allows them to anticipate vulnerabilities and pinpoint likely attack targets.

Predicting Vulnerabilities and Attack Targets

Models such as BERT and XGBoost can be applied to text from cybersecurity news, blogs, social media, and CVE reports to spot potential threats. Natural Language Processing (NLP) and classification techniques then categorize these vulnerabilities and assign severity levels automatically [9][10][11].

Supervised learning methods, such as Support Vector Machines (SVM) and Random Forests, help classify system data to uncover known weaknesses. Meanwhile, unsupervised approaches like clustering and autoencoders, along with reinforcement learning, are adept at identifying anomalies and adapting to evolving threats [11].

Once vulnerabilities are identified, they are mapped to specific assets within the Healthcare Information Infrastructure (HCII) - think electronic health record systems, medical devices, or cloud storage. This mapping provides actionable insights, enabling organizations to prioritize mitigation efforts [9][10]. Machine learning also integrates threat intelligence to predict the Tactics, Techniques, and Procedures (TTP) that attackers may use [9]. This intelligence helps translate technical vulnerabilities into measurable business risks.
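The mapping step can be sketched as a join between findings and an asset inventory, followed by a ranking. Everything specific here is an assumption for illustration: the asset names, the 1-5 criticality scale, the placeholder finding IDs (not real CVE numbers), and the scoring formula of severity times exploit likelihood times criticality.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    criticality: int  # assumed scale: 1 (low) to 5 (patient-care critical)


@dataclass(frozen=True)
class Finding:
    vuln_id: str               # placeholder ID, not a real CVE number
    asset: str
    severity: float            # 0.0-10.0, e.g. a CVSS base score
    exploit_likelihood: float  # 0.0-1.0, e.g. output of a predictive model


def prioritize(findings, assets):
    """Rank findings by severity x likelihood x asset criticality (assumed formula)."""
    by_name = {asset.name: asset for asset in assets}
    scored = [
        (f.severity * f.exploit_likelihood * by_name[f.asset].criticality, f)
        for f in findings
    ]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


assets = [Asset("ehr", 5), Asset("infusion-pump", 5), Asset("billing", 3)]
findings = [
    Finding("VULN-001", "billing", 9.8, 0.2),
    Finding("VULN-002", "infusion-pump", 7.5, 0.6),
    Finding("VULN-003", "ehr", 6.1, 0.4),
]

ranked = prioritize(findings, assets)
for score, finding in ranked:
    print(f"{finding.vuln_id} on {finding.asset}: priority {score:.1f}")
```

In this made-up data the finding with the highest raw severity ranks last, because it sits on a less critical asset and is less likely to be exploited. That is the kind of reordering asset mapping is meant to surface.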

Measuring and Prioritizing Business Risks

Machine learning goes beyond technical assessments by linking cybersecurity signals to financial impacts, such as downtime, breach costs, or penalties under HIPAA regulations. To ensure these models effectively prioritize risks, evaluate their performance using metrics like Accuracy, Recall, Precision, F1 Score, and Area Under the Curve (AUC) [13][4].
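For reference, the metrics named above can be computed directly from predictions. The sketch below uses made-up labels and scores, with 1 meaning "incident" and 0 meaning "benign"; the 0.5 decision threshold is an arbitrary choice for the example. AUC is computed here as the fraction of positive/negative pairs in which the positive example receives the higher score, with ties counted as half.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def roc_auc(y_true, scores):
    """Probability that a random positive outranks a random negative."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives for n in negatives
    )
    return wins / (len(positives) * len(negatives))


# Made-up evaluation data.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.7, 0.2, 0.1, 0.1, 0.05, 0.4, 0.2]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

metrics = classification_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(metrics, round(auc, 3))
```

Recall deserves particular attention in security settings: a missed incident (false negative) is usually costlier than a false alarm, and accuracy alone can look high simply because incidents are rare.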

For spotting anomalies in electronic health records, techniques like Isolation Forest and Local Outlier Factor are highly effective in detecting insider threats or unauthorized access. A study using Isolation Forest SVM reported impressive results, achieving 99.21% accuracy, 99.75% sensitivity, and a 98.72% F1 Score in identifying EHR anomalies [13].

When assessing broader legal and cybersecurity risks, Deep Neural Networks (DNN) have shown strong results with 95.4% accuracy, 93.1% recall, and an AUC of 0.975 [4]. Similarly, the PCA+RF model also performed well, achieving 94.1% accuracy and an AUC of 0.960 [4]. These insights allow organizations to quantify which vulnerabilities pose the greatest financial risks, enabling smarter decision-making and more effective continuous monitoring strategies.

Setting Up Continuous Risk Monitoring

Static risk assessments quickly lose relevance in dynamic environments. Machine learning–driven monitoring offers a solution by adapting to new threats and changes in infrastructure. Algorithms like Isolation Forest and Local Outlier Factor are particularly useful for continuously analyzing unlabeled healthcare data, detecting anomalies that could signal insider threats or unauthorized access [13].

Feature selection techniques, such as cross-correlation and Principal Component Analysis (PCA), help reduce data volume while improving accuracy [13][4]. Dynamic risk assessment methods, enriched by threat models and scenario-based simulations, further enhance continuous monitoring, especially in Internet of Medical Things (IoMT) networks [12].

This approach minimizes response times, giving security teams the ability to act proactively. By identifying threats early, they can deploy countermeasures before attackers gain a foothold or move laterally through the network [11].

Automating Threat Detection and Response

Machine learning is reshaping how healthcare organizations handle cyber threats. By analyzing massive streams of data in real time, it identifies patterns and irregularities - like unexpected log-ins or unauthorized access to Electronic Health Records (EHRs) - that often go unnoticed by human analysts or outdated tools. This capability shortens the time needed to identify incidents by a reported 98 days, giving security teams a critical edge in stopping attacks before they spiral out of control [2][14][5]. With predictive analytics laying the groundwork, the focus now shifts toward automating both threat detection and rapid response.

Detecting Anomalies in Healthcare Systems

Unsupervised machine learning algorithms are particularly skilled at spotting abnormal behaviors across healthcare systems. Tools like Isolation Forest and Local Outlier Factor stand out for their ability to detect anomalies in EHR data while keeping false positives to a minimum [13]. For Internet of Medical Things (IoMT) environments, one proposed model achieved near-perfect performance - 99.9% accuracy, recall, F1 score, and precision - when tested on the WUSTL-EHMS dataset [15]. These algorithms work around the clock, monitoring everything from network traffic to device activity and user access patterns. For instance, they can flag a medical device communicating with an unfamiliar IP address or detect a user accessing records outside of normal working hours.
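To show the idea behind Isolation Forest, here is a simplified from-scratch sketch using only the standard library. It is not the model from the cited studies. The access events are synthetic, with two made-up features per event (hour of day and number of records opened), and the function names are illustrative. For real workloads a maintained implementation such as scikit-learn's `IsolationForest` would be the more sensible choice.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def avg_path_length(n):
    """c(n): average path length of an unsuccessful binary-search-tree lookup."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Recursively split on a random feature at a random threshold."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    feature = rng.randrange(len(points[0]))
    low = min(p[feature] for p in points)
    high = max(p[feature] for p in points)
    if low == high:
        return {"size": len(points)}
    split = rng.uniform(low, high)
    left = [p for p in points if p[feature] < split]
    right = [p for p in points if p[feature] >= split]
    return {
        "feature": feature,
        "split": split,
        "left": build_tree(left, depth + 1, max_depth, rng),
        "right": build_tree(right, depth + 1, max_depth, rng),
    }


def path_length(point, node, depth=0):
    """Depth at which the point lands, adjusted for unsplit leaf contents."""
    if "size" in node:
        return depth + avg_path_length(node["size"])
    go_left = point[node["feature"]] < node["split"]
    child = node["left"] if go_left else node["right"]
    return path_length(point, child, depth + 1)


def fit_forest(points, n_trees=100, sample_size=256, seed=0):
    """Build n_trees trees on random subsamples; needs at least two points."""
    rng = random.Random(seed)
    size = min(sample_size, len(points))
    max_depth = math.ceil(math.log2(size))
    trees = [build_tree(rng.sample(points, size), 0, max_depth, rng)
             for _ in range(n_trees)]
    return trees, size


def anomaly_score(point, trees, size):
    """Score in (0, 1]; values near 1 mean the point is isolated quickly."""
    mean_depth = sum(path_length(point, t) for t in trees) / len(trees)
    return 2.0 ** (-mean_depth / avg_path_length(size))


data_rng = random.Random(42)
# Synthetic daytime access events: (hour of day, records opened).
events = [(data_rng.uniform(9, 17), data_rng.uniform(3, 8)) for _ in range(50)]
events.append((3.0, 400.0))  # a 3 a.m. bulk access, the planted anomaly

trees, size = fit_forest(events)
scores = [anomaly_score(e, trees, size) for e in events]
most_anomalous = max(range(len(events)), key=lambda i: scores[i])
print(events[most_anomalous], round(scores[most_anomalous], 3))
```

The design relies on a simple observation: unusual points are separated from the rest in very few random splits, so a short average path through the trees signals an anomaly without any labeled training data.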

Adding Machine Learning to Incident Response

When a threat is identified, machine learning can take charge of critical response actions, minimizing damage quickly. These automated responses might involve isolating compromised systems, halting the spread of ransomware, or disabling suspicious user accounts [2][14]. In healthcare, where even a few minutes of downtime can disrupt patient care, this speed is indispensable. The Health Sector Coordinating Council (HSCC) is currently developing guidance for 2026 that will address AI-related cybersecurity risks, including practical playbooks for handling detection, response, and recovery during AI-driven cyber incidents [6].

Maintaining Human Oversight in Automated Systems

While machine learning systems boast impressive accuracy, human oversight remains essential for ensuring patient safety and validating critical decisions. AI plays a pivotal role in cybersecurity, but it works best when paired with human expertise. Security teams need to scrutinize AI-driven decisions for potential biases, ethical concerns, and false positives [16]. Clear escalation protocols are crucial for high-stakes scenarios, ensuring that human judgment remains at the center of decision-making. Additionally, transparency in AI processes helps analysts understand why certain activities are flagged, making it easier to separate real threats from harmless anomalies [16]. This balanced approach ensures that automation enhances, rather than replaces, human insight.
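One way to encode an escalation protocol like this is a routing function that lets only low-impact, high-confidence detections trigger an automatic action, and sends everything else to a person together with the model's explanation. The threshold, asset names, alert kinds, and response actions below are hypothetical policy values chosen for the example, not recommendations.

```python
from dataclasses import dataclass

# Hypothetical policy values; set them with clinical and security leadership.
AUTO_ACTION_MIN_CONFIDENCE = 0.95
PATIENT_CARE_ASSETS = {"infusion-pump", "ehr-frontend", "imaging-server"}
RESPONSE_ACTIONS = {
    "ransomware": "isolate host",
    "unusual-login": "disable account",
}


@dataclass
class Alert:
    asset: str
    kind: str          # e.g. "ransomware" or "unusual-login"
    confidence: float  # model score in [0, 1]
    explanation: str   # why the model flagged it, shown to the analyst


def route(alert):
    """Return (decision, detail); only low-impact, high-confidence cases run unattended."""
    action = RESPONSE_ACTIONS.get(alert.kind, "investigate")
    if alert.asset in PATIENT_CARE_ASSETS:
        # Anything touching patient care always goes to a human first.
        return "escalate", f"page on-call analyst before '{action}': {alert.explanation}"
    if alert.confidence >= AUTO_ACTION_MIN_CONFIDENCE and action != "investigate":
        return "automate", action
    return "review", f"queue for analyst: {alert.explanation}"


alerts = [
    Alert("billing-workstation", "ransomware", 0.98, "mass file renames"),
    Alert("infusion-pump", "ransomware", 0.99, "unfamiliar outbound IP"),
    Alert("billing-workstation", "unusual-login", 0.60, "login at 3 a.m."),
]
for alert in alerts:
    print(alert.asset, route(alert))
```

Carrying the explanation through to the analyst supports the transparency goal described above: the reviewer sees why an activity was flagged, not only that it was.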


Improving Third-Party and AI Supply Chain Risk Management

Healthcare organizations often juggle a complex web of vendor relationships, each introducing potential cybersecurity risks. The traditional approach to third-party risk management - manual reviews and static questionnaires - quickly becomes unmanageable as the vendor ecosystem grows. Machine learning replaces these checklists with continuous, automated risk intelligence that adapts to evolving threats across the supply chain, making it a vital component of real-time risk management under Risk Intelligence 3.0. Below, we explore how machine learning enhances third-party assessments and tackles emerging AI risks.

Using Machine Learning for Third-Party Risk Assessments

Machine learning streamlines the entire process of vendor risk management, from initial assessments to ongoing monitoring and compliance checks. For example, natural language processing (NLP) can analyze security questionnaires, flagging inconsistencies and generating specific follow-up questions. This helps teams quickly identify vendors that pose higher risks. Additionally, machine learning algorithms leverage historical vendor data, past security incidents, and compliance records to predict which vendors are most likely to present future risks. This predictive power allows risk teams to prioritize their efforts where they matter most, saving time and resources [17].
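The predictive step can be illustrated with a logistic scoring function over a few vendor features. This is a sketch under loud assumptions: the feature names, weights, bias, and vendor records are all invented for the example. In a real program the weights would be learned from historical vendor incidents and compliance records (for instance with logistic regression) rather than set by hand.

```python
import math

# Hypothetical weights; a real model would learn these from historical data.
WEIGHTS = {
    "past_incidents": 0.8,
    "open_critical_findings": 0.5,
    "handles_phi": 1.0,
    "has_current_audit_report": -1.2,
}
BIAS = -2.0


def vendor_risk(features):
    """Logistic score in (0, 1): estimated chance of a future security issue."""
    z = BIAS + sum(WEIGHTS[name] * float(value)
                   for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


# Made-up vendor records.
vendors = {
    "vendor-a": {"past_incidents": 0, "open_critical_findings": 0,
                 "handles_phi": 1, "has_current_audit_report": 1},
    "vendor-b": {"past_incidents": 2, "open_critical_findings": 3,
                 "handles_phi": 1, "has_current_audit_report": 0},
    "vendor-c": {"past_incidents": 1, "open_critical_findings": 0,
                 "handles_phi": 0, "has_current_audit_report": 1},
}

ranked = sorted(vendors, key=lambda name: vendor_risk(vendors[name]), reverse=True)
for name in ranked:
    print(f"{name}: {vendor_risk(vendors[name]):.2f}")
```

Sorting vendors by this score gives risk teams a review order, so the limited time available for deep assessments goes to the vendors most likely to cause problems.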

Assessing AI Risks in Vendor Relationships

As more vendors incorporate AI into their offerings, assessing the associated risks becomes increasingly crucial. Key focus areas include evaluating data lineage, ensuring robust model governance, and identifying vulnerabilities to adversarial threats. Best practices advocate for securing AI components right from the start and maintaining continuous monitoring throughout the AI lifecycle. Achieving comprehensive oversight of AI and machine learning systems across the supply chain is essential for effective risk management. Contractual agreements should also clearly define responsibilities related to data security, model updates, and incident response to mitigate risks effectively.

Implementing Machine Learning Workflows with Censinet

Censinet offers an advanced solution for managing these challenges, integrating machine learning into its workflows to simplify third-party risk monitoring. Using Censinet RiskOps, organizations can centralize machine learning–driven workflows to streamline vendor risk management. Censinet AI™ further enhances this process by summarizing evidence, documenting integration details, and identifying potential fourth-party risks. This approach builds on predictive analytics and automated threat response, extending these capabilities across vendor ecosystems.

A standout feature of Censinet AI is its "human-in-the-loop" design, which allows risk teams to maintain control through customizable rules and review processes. For AI governance, Censinet AI acts as a kind of "air traffic control", directing critical findings and tasks to the appropriate stakeholders, such as members of an AI governance committee. Additionally, an intuitive AI risk dashboard consolidates real-time data, enabling swift and informed decision-making. This combination of automation and human oversight ensures a balanced and effective approach to managing third-party and AI-related risks.

Conclusion: Scaling Risk Programs with Machine Learning

Scaling risk programs with machine learning begins with evaluating the current state of risk management and addressing any technology gaps [1][18]. Setting clear and measurable objectives is crucial for driving noticeable improvements in managing risks effectively.

Machine learning transforms compliance efforts from being reactive to proactive by enabling continuous monitoring [19]. Consider this: in 2022, the U.S. Department of Health and Human Services issued over $143 million in HIPAA fines, while healthcare data breaches averaged a staggering $10.93 million per incident - the highest across all industries [20]. Automated compliance tools and real-time threat detection systems are no longer optional; they’re vital. Predictive analytics can help pinpoint vulnerabilities before they escalate, and AI-powered anonymization tools play a key role in safeguarding patient privacy during research and data analysis [20].

Starting small is often the best approach. Pilot projects allow organizations to test AI capabilities, measure their impact, and then scale up gradually [7]. Maintaining robust data governance - through regular audits and data cleaning - is essential to ensure the reliability of machine learning systems [7]. Collaboration across departments like IT, legal, and risk management is equally important for tackling technical challenges while staying compliant with regulations [7][1].

As these technologies evolve, ongoing learning and adaptation are critical. Engaging clinical teams early in the development process ensures that AI tools are both practical and meaningful in a healthcare setting [18]. Using explainable AI can help demystify algorithms, fostering trust among stakeholders [7][4]. To prevent algorithmic bias, organizations should rely on diverse datasets and implement fairness-focused practices, while regularly updating models to address emerging threats [7][8][21]. This "human-in-the-loop" approach - where automation enhances rather than replaces human decision-making - empowers healthcare organizations to scale their risk management efforts effectively, all while prioritizing patient safety and quality care delivery.

FAQs

How does machine learning enhance real-time threat detection in healthcare?

Machine learning is transforming real-time threat detection in healthcare by analyzing massive datasets to identify patterns and potential risks that conventional methods might overlook. By automating threat detection, these systems can pinpoint emerging risks and respond instantly, reducing the window of vulnerability.

What makes machine learning even more powerful is its ability to adapt. As new data comes in, it updates risk assessments on the fly. This means healthcare organizations can keep pace with ever-changing threats, safeguarding sensitive patient information and critical systems with greater efficiency.

How can healthcare organizations ensure reliable data for machine learning?

To provide dependable data for machine learning, healthcare organizations need to prioritize both data quality and security. Begin by applying thorough data validation methods and preprocessing techniques, such as denoising and normalization, to clean and standardize datasets effectively. Safeguard sensitive information with strict access controls and encryption, ensuring protection for data both at rest and during transmission.

Conducting regular risk assessments is essential to uncover potential vulnerabilities. It's equally important to evaluate the security measures of third-party vendors to minimize external risks. Establishing strong governance policies that comply with HIPAA and other relevant regulations ensures data remains secure and trustworthy throughout its entire lifecycle.

How does machine learning help manage third-party risks in healthcare?

Machine learning is transforming third-party risk management in healthcare by handling massive amounts of data to spot vulnerabilities and anticipate risks. It streamlines threat detection, cutting down on time and minimizing human errors, all while improving how risks are assessed for vendors and partners.

With these tools, healthcare organizations can bolster their security measures, stay compliant with regulations, and make smarter decisions when it comes to managing third-party relationships.