Healthcare AI Data Governance: Privacy, Security, and Vendor Management Best Practices

Post Summary

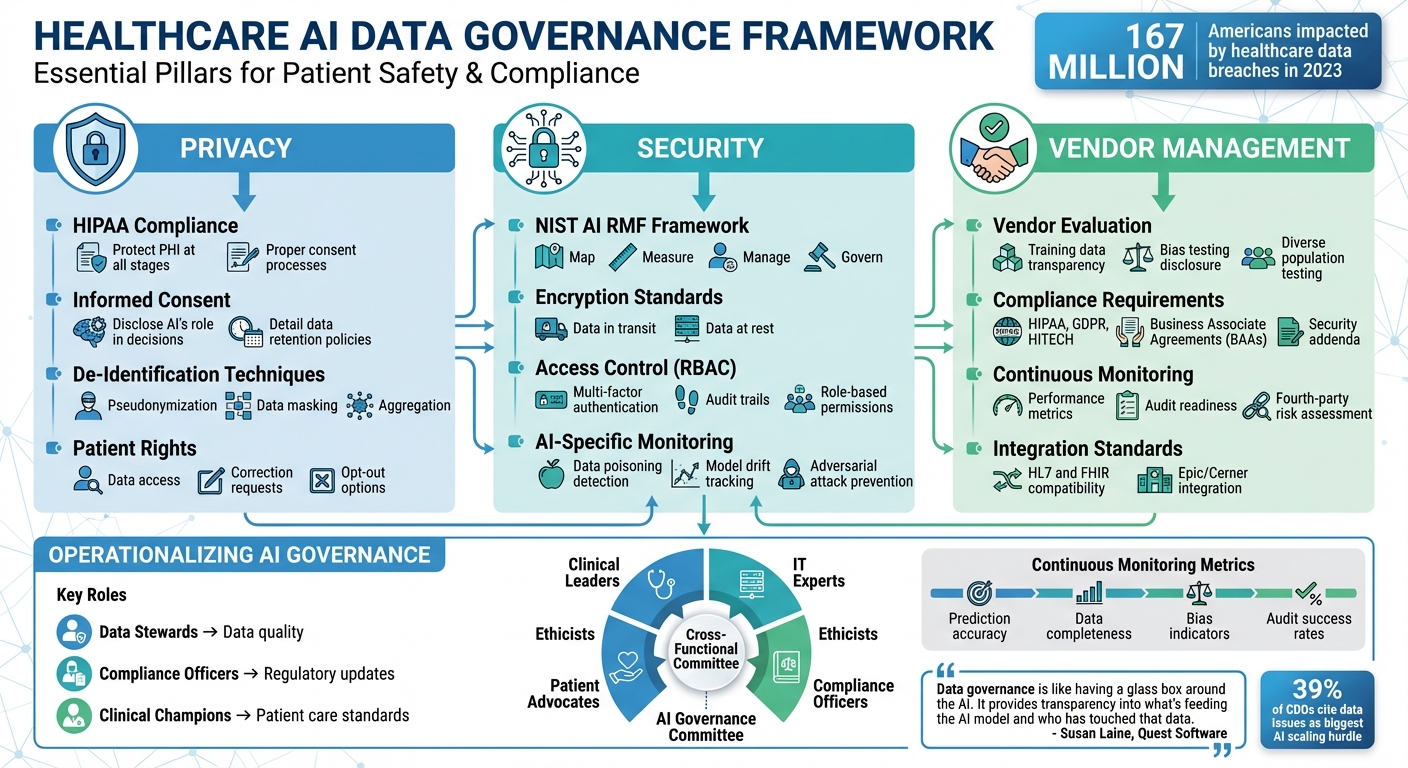

AI is transforming healthcare, but managing data for these systems is challenging. Privacy, security, and vendor management are at the core of effective AI data governance. Here's what you need to know:

- Privacy: Protect patient data by following HIPAA regulations, ensuring informed consent, and using techniques like de-identification to safeguard information.

- Security: Secure AI systems with measures like encryption, role-based access control, and AI-specific monitoring to prevent breaches and maintain reliability.

- Vendor Management: Evaluate and monitor AI vendors rigorously. Ensure compliance, transparency, and robust contracts to minimize risks from third-party tools.

With over 167 million Americans impacted by healthcare data breaches in 2023, robust governance isn't optional - it's essential to safeguard patient trust and comply with regulations. This article outlines practical strategies to manage data responsibly and securely while navigating the complexities of AI in healthcare.

Privacy Best Practices for Healthcare AI Systems

Safeguarding patient privacy is a cornerstone of healthcare AI systems, requiring strict adherence to HIPAA standards for managing Protected Health Information (PHI). From data collection to storage and access, AI systems must handle PHI only for approved purposes, supported by proper consent and clear communication. Patients should be informed about how their data will be utilized in AI applications. Regulations like the ONC HTI-1 Rule Section (b) emphasize the importance of transparency, mandating that organizations disclose when algorithms play a role in clinical decisions.

Regulatory Compliance and Consent Models

HIPAA violations can lead to severe penalties, making it essential to integrate compliance into AI systems from the outset. Establishing informed consent processes tailored to AI use cases is critical. Patients should understand how their data will be analyzed, the extent of AI's influence on decisions, and the safeguards in place to protect their information. Consent should detail AI training, data retention policies, and any third-party data sharing arrangements. AI governance must expand on existing IT governance frameworks, incorporating medical ethics and clinical standards to ensure compliance throughout the AI system's lifecycle.
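One way to make AI-specific consent enforceable is to store it as structured data that a pipeline can check before a record is used. The sketch below is illustrative only: the `ConsentRecord` fields and the `may_use_for_training` helper are hypothetical names that mirror the consent elements described above (AI training, retention, third-party sharing), not a standard schema.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ConsentRecord:
    """Hypothetical consent record; field names are assumptions for illustration."""
    patient_ref: str                  # pseudonymous reference, not a raw identifier
    allows_ai_training: bool          # patient agreed to use of data for model training
    allows_third_party_sharing: bool  # patient agreed to sharing with vendors
    retain_until: date                # end of the agreed retention period


def may_use_for_training(consent: ConsentRecord, today: date,
                         shared_with_vendor: bool = False) -> bool:
    """Return True only if every consent condition for this use is met."""
    if today > consent.retain_until:
        return False  # retention period has expired
    if not consent.allows_ai_training:
        return False  # no consent for AI training
    if shared_with_vendor and not consent.allows_third_party_sharing:
        return False  # vendor involvement requires sharing consent
    return True
```

Checking consent in code at the point of use, rather than only at collection time, helps keep later changes in retention or sharing from being silently ignored.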

Data De-Identification and Minimization Techniques

De-identification methods are vital for protecting privacy while maintaining the utility of data for AI systems. Techniques such as pseudonymization, data masking, and aggregation help reduce privacy risks without compromising AI performance. However, de-identification is not a one-time task. As Andreea Bodnari from ALIGNMT AI Inc points out:

"Healthcare enterprises should develop or enhance their data governance policies to include objective standards for data used in AI systems. This includes ensuring data completeness, representativeness, and freedom from bias during both training and production." [2]

Regular audits are essential to ensure that de-identified data reflects diverse populations, minimizing biased outcomes. Testing AI models across various patient demographics after de-identification can help identify disparities early. To maintain transparency and support debugging, document every step of the de-identification process, including data lineage and transformations. Before deploying AI, confirm that de-identification techniques align with the use case without sacrificing accuracy.
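The three techniques named above can be sketched in a few lines of Python. This is a minimal illustration, not a HIPAA Safe Harbor or Expert Determination implementation: the field names, the `PSEUDONYM_KEY` placeholder, and the ten-year band width are all assumptions. The one rule taken from Safe Harbor is that ages of 90 and above are grouped into a single category.

```python
import hashlib
import hmac

# Hypothetical placeholder; a real key would come from a key management system.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"


def pseudonymize(patient_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Pseudonymization: replace an identifier with a keyed hash so the same
    patient maps to the same token without exposing the original ID."""
    digest = hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def mask_phone(phone: str) -> str:
    """Masking: hide all but the last two digits of a phone number."""
    digits = [c for c in phone if c.isdigit()]
    return "*" * (len(digits) - 2) + "".join(digits[-2:])


def age_band(age: int, width: int = 10) -> str:
    """Aggregation: turn an exact age into a coarser band, e.g. 47 -> '40-49'."""
    if age >= 90:
        return "90+"
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def deidentify(record: dict) -> dict:
    """Minimization: return a new record with only the fields the model needs."""
    return {
        "patient_ref": pseudonymize(record["patient_id"]),
        "phone": mask_phone(record["phone"]),
        "age_band": age_band(record["age"]),
        "diagnosis_code": record["diagnosis_code"],
    }
```

Because `deidentify` builds a new dictionary from an explicit list of fields, anything not listed (such as a name) is dropped by default, which also makes the transformation easy to document for lineage purposes.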

Transparency and Patient Rights

Transparency is key to building trust in AI-driven healthcare. Organizations must provide clear notifications when AI is involved in clinical decision-making, explaining the algorithm's role and how it generates recommendations. Patients should have the right to access their data, request corrections, and understand how AI-assisted decisions may impact their care. As the WHO highlights:

"Health data governance also plays a crucial role in supporting artificial intelligence (AI) and machine learning in health, which is reliant upon access to large, high-quality datasets for training algorithms. In this context, health data governance ensures that data used for AI are ethically sourced, representative and free from bias, which helps in developing safe and reliable AI models that also enshrine gender, equity and human rights." [3]

Implementing mechanisms for patients to review how their data is used, opt out of specific AI applications when appropriate, and receive clear explanations of AI-driven recommendations is both ethical and increasingly required by regulations. These measures not only uphold patient trust but also serve as the foundation for strong security and vendor management practices in AI-powered healthcare systems.

Security Measures for Protecting AI Data and Infrastructure

Healthcare AI systems come with their own set of security challenges, going beyond standard IT protections to address vulnerabilities in areas like model training, data pipelines, and algorithmic decision-making. To meet these challenges, AI governance integrates privacy and security measures at every stage - from obtaining patient consent and de-identifying data to implementing role-based access controls and audit trails. These efforts ensure compliance with HIPAA regulations while safeguarding patient data and maintaining the reliability of AI models. Below, we’ll explore specific strategies in risk management, access control, and monitoring that help secure AI in healthcare.

NIST-Based Security Controls

The NIST AI Risk Management Framework (AI RMF) offers a voluntary structure that healthcare organizations can use to strengthen AI security. Its four core functions - Govern, Map, Measure, and Manage - help identify and address vulnerabilities unique to AI systems [1][2]. To align with HIPAA requirements, organizations should pair these functions with technical, administrative, and physical safeguards: encrypting data both in transit and at rest, training staff on AI system protocols, and securing physical access to servers hosting AI models.

For example, when a hospital tested an AI system for chest X-ray interpretation in September 2025, its governance framework flagged issues early. The system produced false positives and negatives without clinical justification. The hospital immediately suspended the algorithm, conducted a root cause analysis to identify gaps in the training data, retrained the model using a more diverse dataset, and redeployed it under stricter monitoring [1]. This shows how a robust governance structure can mitigate risks and maintain system integrity.

Access Control and Identity Management

Role-based access control (RBAC) is key to ensuring that only authorized individuals can interact with AI systems and the sensitive data they handle. Healthcare organizations should require multi-factor authentication for all users, especially those with administrative privileges. Monitoring access to training data, algorithm updates, and deployment logs is also essential.

Consider the case of an AI-driven claims processing model used by a health insurer. The model, trained on historical data, unintentionally prioritized specific provider types due to biases in the legacy data. Proper access controls and detailed audit trails helped identify and address these embedded patterns, preventing further compromise of the model’s integrity [1]. Measures like these help ensure that unauthorized changes or biases in data sources are detected and corrected quickly.
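As a rough sketch of how RBAC, multi-factor authentication, and access logging fit together, the example below checks a role-to-permission table and records every decision. The role names, permission strings, and the `authorize` helper are hypothetical; a production system would normally delegate these checks to an identity provider rather than an in-memory table.

```python
from dataclasses import dataclass

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "clinician": {"view_prediction"},
    "data_scientist": {"view_prediction", "read_training_data"},
    "ml_admin": {"view_prediction", "read_training_data",
                 "update_model", "read_deploy_logs"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    mfa_verified: bool  # True once the user has passed a second factor


def is_allowed(user: User, action: str) -> bool:
    """Grant an action only if MFA succeeded and the user's role includes it."""
    if not user.mfa_verified:
        return False
    return action in ROLE_PERMISSIONS.get(user.role, set())


def authorize(user: User, action: str, audit_log: list) -> bool:
    """Check access and record the decision, whether allowed or denied."""
    decision = is_allowed(user, action)
    audit_log.append({"user": user.name, "role": user.role,
                      "action": action, "allowed": decision})
    return decision
```

Logging denied attempts as well as granted ones matters here: repeated denials against training data or model updates are often the first visible sign of misuse.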

AI-Specific Security Monitoring and Incident Response

Traditional security measures need to evolve to address AI-specific threats, such as data poisoning, model drift, and adversarial attacks that can manipulate outputs. Logging all interactions with AI systems and tracking data lineage - from its source to the insights it generates - helps identify problems and supports root cause analysis [1][4].
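Logs are only useful for root cause analysis if they can be trusted. One common way to make an audit trail tamper-evident is to chain entries with hashes, so that altering an earlier entry invalidates it and everything after it. The sketch below assumes events are JSON-serializable dictionaries; the function names and event fields are illustrative.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with this event's content."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    entry = {"event": event, "prev_hash": prev_hash,
             "hash": _entry_hash(prev_hash, event)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash; return False if any entry was altered or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Recording the dataset and model version in each event gives a simple form of data lineage: an investigator can walk the chain from a questionable output back to the data that produced it.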

Healthcare organizations should establish dedicated incident response protocols for AI systems. These protocols should include steps to suspend affected algorithms, investigate the underlying issues, and implement corrective actions. Continuous monitoring is also critical, as it can detect anomalies like sudden drops in prediction accuracy or unexpected decision-making patterns. Regular performance and bias assessments further help to catch vulnerabilities early, ensuring that models remain secure and compliant [1].
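The "detect a sudden drop, then suspend" step can be sketched as a rolling accuracy check. The example is a simplified illustration: the `AccuracyMonitor` class, the window size, and the allowed drop of 0.05 are assumptions, and a real deployment would route the suspension flag into a human-reviewed incident process rather than act on it automatically.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag large drops."""

    def __init__(self, baseline: float, window: int = 100, max_drop: float = 0.05):
        self.baseline = baseline          # accuracy measured at validation time
        self.max_drop = max_drop          # tolerated drop before suspension
        self.outcomes = deque(maxlen=window)
        self.suspended = False

    def rolling_accuracy(self) -> float:
        """Fraction of correct predictions in the current window."""
        if not self.outcomes:
            raise ValueError("no outcomes recorded yet")
        return sum(self.outcomes) / len(self.outcomes)

    def record(self, prediction, actual) -> bool:
        """Record one confirmed outcome; return True if the model is suspended."""
        self.outcomes.append(prediction == actual)
        window_full = len(self.outcomes) == self.outcomes.maxlen
        if window_full and self.rolling_accuracy() < self.baseline - self.max_drop:
            self.suspended = True  # stays set until a human clears it
        return self.suspended
```

Waiting for a full window before evaluating avoids suspending a model on the strength of its first few predictions.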

Vendor Management in AI Data Governance

Ensuring patient data remains secure and private is a cornerstone of AI data governance. A key part of this effort involves managing third-party AI vendors effectively. These vendors can introduce unique risks, making it essential to evaluate, contract, and monitor them diligently to maintain a secure and compliant AI ecosystem.

Evaluating AI Vendors and Contracts

When working with AI vendors, it's crucial to address the external risks they might bring. Start by requiring transparency in their processes. Vendors should clearly disclose their training data sources, explain how they test for bias, and provide details about the confidence levels of their AI models. Additionally, they should demonstrate testing across diverse patient populations to minimize disparities in outcomes.

Ensure vendors comply with regulations like HIPAA, GDPR, the HITECH Act, and relevant state laws. Contracts should include Business Associate Agreements (BAAs) to protect Protected Health Information (PHI). Security measures such as encryption standards, multi-factor authentication, and incident response protocols should be explicitly outlined in security addenda. Service Level Agreements (SLAs) should define expectations for system uptime, data availability, and performance monitoring. Also, assess whether the vendor's solution integrates smoothly with existing systems like Epic or Cerner using standards such as HL7 and FHIR.

Risk Assessment and Continuous Monitoring of Vendors

Initial evaluations are only the beginning. To maintain compliance and data integrity, vendors require ongoing risk assessment and monitoring. Track their performance with metrics like data accuracy, audit readiness, and the frequency of security incidents. Assign data stewards to oversee and enforce vendor data quality standards.
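The three metrics mentioned above can be tracked in a simple scorecard that data stewards review on a schedule. The sketch below is hypothetical throughout: the `VendorMetrics` fields and both thresholds are placeholders that each organization would set according to its own risk tolerance.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical thresholds; real values depend on the organization's risk appetite.
MIN_DATA_ACCURACY = 0.98
MAX_INCIDENTS_12M = 1


@dataclass
class VendorMetrics:
    name: str
    data_accuracy: float     # share of sampled records passing validation (0 to 1)
    audit_ready: bool        # requested audit evidence was complete and current
    incidents_last_12m: int  # security incidents reported in the last 12 months


def review_flags(metrics: VendorMetrics) -> List[str]:
    """Return the reasons, if any, that a vendor needs follow-up."""
    flags = []
    if metrics.data_accuracy < MIN_DATA_ACCURACY:
        flags.append("data accuracy below threshold")
    if not metrics.audit_ready:
        flags.append("audit evidence incomplete")
    if metrics.incidents_last_12m > MAX_INCIDENTS_12M:
        flags.append("security incident frequency above threshold")
    return flags
```

Returning explicit reasons instead of a single score keeps the review explainable when a finding is escalated.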

Pay attention to fourth-party risks - vendors often subcontract or depend on other technology providers, which can introduce vulnerabilities. Create robust audit trails to monitor how data is used by both AI systems and vendors, ensuring accountability at every step. Data problems of this kind are a common obstacle: as AWS Enterprise Strategist Thomas Godden notes, 39% of chief data officers pinpoint data issues as the biggest hurdle to scaling AI effectively [5].

Collaborate with vendors through working groups to establish shared terminology, access rules, and responsibilities. This proactive approach helps ensure data reliability and can identify potential issues early. Regularly reviewing governance policies is also important to stay aligned with evolving regulations and advancements in AI technology.

Using Censinet for AI Vendor Governance

Censinet RiskOps™ offers a centralized solution for managing vendor risks by automating assessments, tracking compliance, and providing real-time insights into third-party vulnerabilities. Through Censinet Connect™, the platform simplifies vendor assessments by standardizing questionnaires and documentation for all AI vendors.

Censinet AI™ speeds up the assessment process by allowing vendors to complete security questionnaires efficiently. It also summarizes evidence, captures integration details, and evaluates fourth-party risks. The platform generates detailed risk summary reports, cutting down evaluation time while ensuring thorough oversight.

Critical findings and tasks are routed to the appropriate stakeholders, such as members of the AI governance committee. An AI risk dashboard serves as a central hub, consolidating all policies, risks, and tasks related to AI. This streamlined approach ensures teams can address issues promptly, supporting continuous monitoring and accountability across the organization.

Operationalizing AI Data Governance in Healthcare

In healthcare, implementing AI data governance isn't a one-and-done task. It's about integrating governance into everyday workflows to ensure AI systems stay secure, compliant, and reliable throughout their lifecycle [1]. To achieve this, organizations need structured frameworks that make governance a natural part of their operations.

Establishing Governance Structures and Policies

Strong AI governance starts with forming cross-functional committees that include clinical leaders, IT experts, compliance officers, ethicists, and patient advocates [1]. These groups are responsible for reviewing AI projects, crafting policies, and ensuring everything aligns with both clinical needs and regulatory standards.

It's also crucial to define clear roles across the organization. For example:

- Data stewards focus on maintaining data quality.

- Compliance officers handle regulatory updates.

- Clinical champions evaluate AI tools to ensure they meet patient care standards.

This collaborative setup ensures decisions account for diverse perspectives and address potential risks. Documenting these roles and creating decision-making protocols - like escalation procedures and accountability measures - provides a clear roadmap for managing AI deployments effectively.

Monitoring AI Data and Performance Over Time

AI systems aren't static; they evolve as algorithms, data inputs, and regulations change [6]. To stay ahead, healthcare organizations need robust monitoring policies. These should include regular testing and validation of AI models to check data quality, track performance, and ensure compliance with the latest regulations. Logging AI interactions and decisions is also essential for creating audit trails that support accountability [1].

Key metrics to monitor include prediction accuracy, data completeness, and bias indicators across different patient groups. If disparities in performance arise, it's critical to investigate and address the root causes quickly. Regular assessments help identify issues like data drift or algorithm degradation, allowing organizations to refine models and inputs as needed. This ongoing oversight also strengthens vendor management practices.
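A basic bias indicator is the gap in accuracy between the best and worst performing patient groups. The sketch below computes per-group accuracy from labeled outcomes; the group labels and the choice of accuracy as the metric are assumptions, and other measures (such as false negative rate per group) may be more appropriate for a given clinical use.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, prediction, actual) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, actual in records:
        total[group] += 1
        correct[group] += int(prediction == actual)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group: dict) -> float:
    """Difference between the best and worst group accuracy."""
    return max(per_group.values()) - min(per_group.values())
```

For example, if group A scores 1.0 and group B scores 0.5, the gap is 0.5, a disparity large enough to warrant the root cause investigation described above.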

Using Censinet for Continuous AI Governance

Once frameworks and monitoring practices are in place, tools like Censinet RiskOps™ can centralize AI governance efforts. This platform consolidates policies, risks, and tasks, providing governance teams with a real-time view of all AI-related activities. The centralized dashboard simplifies decision-making and ensures nothing slips through the cracks.

Censinet AI™ takes things a step further by automating key processes. For example, critical findings from AI assessments are automatically routed to the right stakeholders - whether that's the governance committee, compliance officers, or clinical leaders - for review and approval. By automating workflows while maintaining human oversight, healthcare organizations can scale their governance efforts without compromising on safety or compliance.

Conclusion and Key Takeaways

When it comes to healthcare, AI data governance isn't just a technical necessity - it's a cornerstone of patient safety, regulatory compliance, and institutional trust. The stakes are high. Breaches, hefty fines, and avoidable mistakes pose real threats to both patients and healthcare organizations. This makes it crucial to establish strong frameworks that prioritize privacy, security, and vendor accountability.

To tackle these challenges, effective AI governance requires collaboration across the board. Clinical leaders, IT experts, compliance officers, and patient advocates need to work together to create clear policies and accountability systems. Governance must be embedded at every stage - whether it's during development, procurement, deployment, or ongoing monitoring. This ensures that critical aspects like data quality, bias testing, and performance evaluations remain front and center. As Susan Laine, Chief Field Technologist at Quest Software, aptly puts it:

"Data governance is like having a glass box around the AI. It provides transparency into what's feeding the AI model and who has touched that data."

The rise of automated governance tools is changing how healthcare organizations manage AI-related risks. Platforms like Censinet RiskOps™ centralize policies, risks, and tasks, while Censinet AI™ streamlines workflows by directing assessment findings to the right individuals and offering unified dashboards. These tools enhance governance efficiency without losing the human oversight that's so critical, paving the way for gradual, targeted improvements.

Starting small can make a big difference. Focus on high-priority areas such as patient demographics or medication data, and expand from there as your organization grows its governance capacity. Establish clear data quality benchmarks, implement strict access controls, and set up continuous monitoring systems with measurable KPIs like data accuracy rates or audit success rates. By taking these steps now, you can lay the groundwork for effective privacy, security, and vendor management.

Ultimately, a well-balanced governance strategy is key to advancing AI in healthcare responsibly. The future of healthcare AI depends on frameworks that blend innovation with accountability, enabling organizations to use AI safely and effectively. By following the best practices outlined here, healthcare providers can build the trust and operational strength needed to adopt AI successfully.

FAQs

What steps can healthcare organizations take to ensure their AI systems comply with HIPAA regulations?

Healthcare organizations can meet HIPAA compliance standards for AI systems by focusing on strong data governance practices. This means putting strict access controls in place to limit who can access sensitive patient data, de-identifying information to protect patient identities, and keeping detailed audit logs to monitor system activity.

It's also crucial to have clear consent management processes, ensuring patients are fully informed about how their data will be used and agree to it. To stay compliant and maintain patient trust, these practices should be regularly reviewed and updated to align with HIPAA regulations.

What are the best practices for securing AI data in healthcare?

To keep AI data secure in healthcare, start by implementing role-based access control (RBAC). This ensures that only those with proper authorization can access sensitive information. Adding data encryption is another key step - protecting data both in transit and at rest. It's equally important to perform regular access reviews to spot and address any vulnerabilities before they become issues.

Using de-identification techniques is a smart way to protect patient privacy, removing identifiable information while still allowing data to be used effectively. Maintaining detailed audit trails adds another layer of security by promoting accountability and transparency in how data is handled. Finally, having strict data governance policies in place helps manage how data is shared, stored, and accessed, reducing risks and fostering trust in healthcare AI systems.

What are the best practices for evaluating and managing AI vendors in healthcare?

To properly assess and manage AI vendors in healthcare, providers should begin by assembling a multidisciplinary governance team. This team plays a crucial role in overseeing vendor selection and ensuring their performance aligns with the organization's standards. A primary focus should be on evaluating vendors' adherence to regulations, such as HIPAA, to safeguard the privacy and security of patient data.

When choosing AI vendors, it's important to examine factors like the safety, accuracy, and bias mitigation of their AI models. Providers should demand transparency regarding how these systems function and thoroughly verify the quality of the data being used. Additionally, routine audits and performance monitoring are essential. These measures help ensure ongoing compliance, reduce risks, and maintain confidence in the AI solutions being implemented.