Machine Learning Vendor Risk Assessment: Data Quality, Model Validation, and Compliance


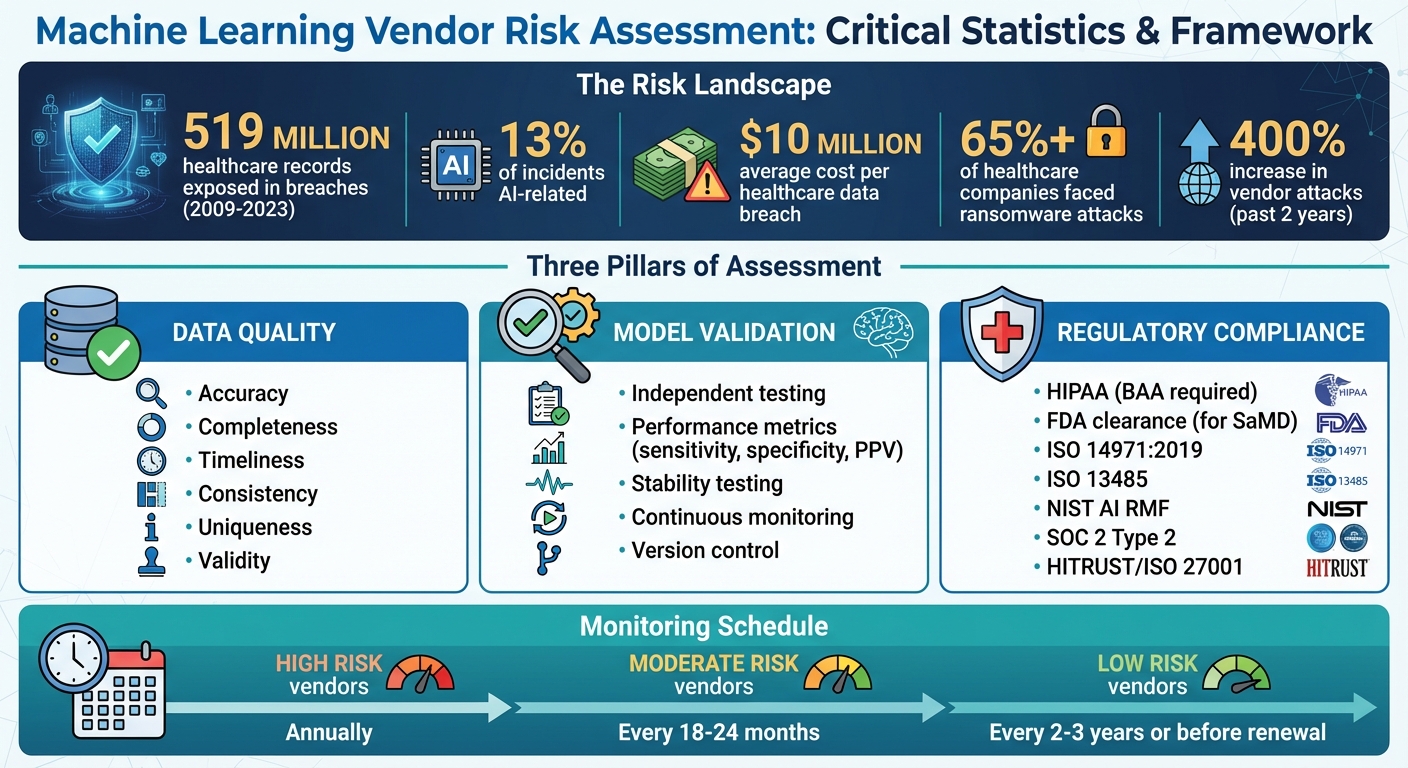

Machine learning is reshaping healthcare, but relying on third-party vendors introduces risks that can affect patient safety, data security, and compliance. Poor data quality, unvalidated models, and regulatory gaps can lead to breaches, operational failures, and legal penalties. Between 2009 and 2023, over 519 million healthcare records were exposed in breaches, with AI-related issues accounting for 13% of incidents. This article highlights the key factors for evaluating ML vendors, focusing on:

- Data Quality: Verify data sources, ensure accuracy, and reduce bias.

- Model Validation: Test models for performance, stability, and compliance with clinical standards.

- Regulatory Compliance: Ensure adherence to HIPAA, FDA guidelines, and emerging AI regulations.

Thorough vendor assessments, continuous monitoring, and structured risk management are critical to mitigating these challenges. By addressing these areas, healthcare organizations can better manage risks associated with ML systems while maintaining safety and compliance.

Healthcare ML Vendor Risk Assessment: Key Statistics and Compliance Framework

Evaluating Data Quality and Governance

Data quality problems are a major weak point in vendor-provided machine learning (ML) systems. For healthcare organizations, evaluating the algorithms alone is not enough; they must also examine the data that powers these models. Poor data governance can lead to faulty predictions, skewed results, and compliance failures, potentially jeopardizing both patient safety and the organization’s reputation. A thorough review of data quality is crucial to ensure models are reliable and meet regulatory standards.

Verifying Data Sources and Origin

Start by identifying where the vendor’s training data comes from. Healthcare organizations should insist on full access to the training and testing datasets used by the vendor. If a vendor only provides summaries, statistics, or data dictionaries instead of the actual datasets, consider this a major red flag[5].

It’s equally important to confirm that all datasets were collected with proper patient consent, adhere to data use agreements, and comply with HIPAA regulations. Vendors must also document the entire data lifecycle, from initial collection to preprocessing and storage. This transparency ensures the data aligns with healthcare privacy and protection standards.

Measuring Data Quality and Reducing Bias

Data should be evaluated against key criteria: accuracy, completeness, timeliness, consistency, uniqueness, and validity[4].

- Accuracy ensures the data reflects real-world conditions without errors.

- Completeness means no critical fields are missing.

- Timeliness confirms the data is up-to-date with current clinical practices.

- Consistency ensures uniformity of information across systems.

- Uniqueness prevents duplicate records that could distort outcomes.

- Validity checks whether the data adheres to predefined formats and ranges.
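
Several of these dimensions can be checked mechanically on a sample of vendor data. The sketch below is illustrative only: the field names, valid ranges, and the one-year staleness cutoff are assumptions, not values from any standard, and accuracy and consistency are left out because they need a trusted reference source to compare against.

```python
from datetime import date

# Hypothetical schema: these field names and ranges are assumptions for illustration.
REQUIRED_FIELDS = ("record_id", "age", "systolic_bp", "recorded_on")


def quality_report(records, as_of, max_age_days=365):
    """Count records that fail completeness, uniqueness, validity, or timeliness."""
    seen_ids = set()
    report = {"incomplete": 0, "duplicate": 0, "invalid": 0, "stale": 0}
    for rec in records:
        # Completeness: every required field is present and non-empty.
        if any(rec.get(field) in (None, "") for field in REQUIRED_FIELDS):
            report["incomplete"] += 1
            continue  # remaining checks need all fields
        # Uniqueness: the same record_id should not appear twice.
        if rec["record_id"] in seen_ids:
            report["duplicate"] += 1
        seen_ids.add(rec["record_id"])
        # Validity: values fall inside predefined (assumed) ranges.
        if not (0 <= rec["age"] <= 120 and 50 <= rec["systolic_bp"] <= 300):
            report["invalid"] += 1
        # Timeliness: the record is no older than the assumed cutoff.
        if (as_of - rec["recorded_on"]).days > max_age_days:
            report["stale"] += 1
    return report
```

A report with non-zero counts is a starting point for questions to the vendor, not a verdict on its own.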

Vendors should also be transparent about their preprocessing methods, such as how they handle missing data, detect anomalies, normalize datasets, and engineer features. These steps play a crucial role in minimizing bias[6]. Additionally, vendors must rigorously test their models on independent, representative datasets. Any gaps in this testing - or concerns about performance - should raise alarms[5]. Models should also be evaluated across diverse patient groups to identify and address disparities before deployment.
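
One way to make the subgroup evaluation concrete is to compute a metric per patient group and look at the spread. The following is a minimal sketch that uses sensitivity as the metric. The group labels and the idea of a single "largest gap" figure are illustrative assumptions, and what counts as an acceptable gap is a clinical and policy decision.

```python
from collections import defaultdict


def sensitivity_by_group(rows):
    """rows: iterable of (group, y_true, y_pred) tuples with 0/1 labels.

    Returns sensitivity per group, covering only groups with at least one positive case.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in rows:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


def largest_gap(per_group):
    """Difference between the best and worst group; 0.0 if there are no groups."""
    values = list(per_group.values())
    return max(values) - min(values) if values else 0.0
```

Small groups produce noisy estimates, so a gap should be read alongside the number of positive cases behind each figure.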

Data Governance and Access Controls

Effective data governance is just as important as data quality. Vendors need a structured governance framework that defines clear roles, responsibilities, and accountability for managing data quality and security[3]. This framework should include policies tailored to healthcare requirements and align with regulations like HIPAA[3]. Strong data segregation practices are also critical to ensure that one client’s data doesn’t influence another’s model outputs[1].

Access controls should operate on the principle of least privilege, meaning users only get access to the data they absolutely need for their role. Vendors should enforce multi-factor authentication, role-based access controls, and maintain detailed audit logs to track data access activities. Encryption must safeguard data both at rest and in transit, using industry-standard protocols. Lastly, vendors must have robust anonymization processes in place to protect patient identities, especially in the event of a breach. Testing these processes thoroughly is non-negotiable.
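
To illustrate how least privilege, role-based access control, and audit logging fit together, here is a minimal in-memory sketch. The role names, permission names, and log fields are hypothetical. A production system would rely on an identity provider and tamper-resistant log storage rather than a Python list.

```python
from datetime import datetime, timezone

# Hypothetical roles and permissions; each role gets only what it needs.
ROLE_PERMISSIONS = {
    "clinician": {"read_phi"},
    "data_engineer": {"read_deidentified"},
    "auditor": {"read_audit_log"},
}

audit_log = []  # stand-in for an append-only audit store


def check_access(user, role, permission):
    """Deny by default, and record every attempt whether allowed or not."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append(
        {
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "permission": permission,
            "allowed": allowed,
        }
    )
    return allowed
```

Logging denied attempts as well as granted ones matters here, since repeated denials are often the first visible sign of misuse.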

Validating Machine Learning Models

Validating a machine learning model is crucial to ensure it’s safe, reliable, and suitable for its intended role in healthcare. Without thorough validation, there’s a real risk of deploying systems that produce inaccurate results, fail to perform as expected, or even compromise patient care. As Charles E. Binkley and colleagues have emphasized:

"Without rigorous quality assurance and continuous safety monitoring, AI systems always pose a risk of inadvertently contributing to diagnostic errors, treatment delays, or inappropriate interventions that can exacerbate existing vulnerabilities in patient care." [5]

This process of validation not only strengthens the model’s reliability but also lays the groundwork for clear documentation of its purpose and limitations.

Model Documentation and Intended Use

Vendors are responsible for providing detailed documentation that defines the model's intended use, clinical applications, and boundaries. This includes information about the training data, performance metrics, and any known limitations [1]. Such transparency is essential for clinicians and IT teams to understand the model’s capabilities and the contexts in which it should or shouldn’t be used.

For instance, the documentation should specify the target patient population and the clinical scenarios where the model performs optimally. It should also highlight situations where the model is not suitable. A good example would be a model trained on adult patient data - it may not be appropriate for pediatric use unless explicitly validated for that purpose.

Testing Model Performance and Stability

To confirm that the model performs as intended, test it on independent, representative datasets that reflect the actual patient demographics and clinical workflows it will encounter. Key performance metrics include sensitivity (the share of actual positive cases the model flags), specificity (the share of actual negative cases it correctly rules out), positive predictive value (the share of positive predictions that are correct), and calibration statistics, which show whether predicted probabilities align with observed outcomes [5].
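
These metrics are simple to recompute from a vendor's held-out predictions, which is a useful check on reported figures. The sketch below assumes binary 0/1 labels. It uses the Brier score as one simple calibration-related statistic; that choice is ours, since the source does not name a specific one.

```python
def classification_metrics(y_true, y_pred):
    """Sensitivity, specificity, and positive predictive value from 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(numerator, denominator):
        # Undefined ratios (empty denominator) are reported as NaN, not 0.
        return numerator / denominator if denominator else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
    }


def brier_score(y_true, y_prob):
    """Mean squared gap between predicted probability and outcome (lower is better)."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_prob)) / len(y_true)
```

Positive predictive value depends on how common the condition is, so it should be computed on data whose prevalence matches the deployment setting.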

Stability testing is equally important. Models can degrade over time due to data drift, which occurs when patient populations, clinical practices, or data collection methods change. Vendors should be prepared to explain how they test for such scenarios, as well as for adversarial attacks or edge cases that could cause the model to fail. Service level agreements (SLAs) should cover more than just system uptime - they should also include accuracy thresholds, as a model that produces incorrect outputs is of little practical use [1].
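
A common way to quantify data drift for a single input feature is the Population Stability Index (PSI), which compares the binned distribution of recent data against the training-time baseline. The sketch below is one possible implementation; the bin edges are supplied by the caller and the `eps` floor for empty bins is an assumption. A frequently cited rule of thumb treats values under about 0.1 as stable and values over about 0.25 as significant drift, but those cutoffs are conventions, not requirements from the source.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two non-empty samples of one feature.

    edges: sorted bin boundaries; values below the first edge fall into bin 0.
    """

    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of edges the value has reached or passed.
            counts[sum(1 for e in edges if v >= e)] += 1
        total = len(values)
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / total, eps) for c in counts]

    baseline = proportions(expected)
    recent = proportions(actual)
    return sum((r - b) * math.log(r / b) for b, r in zip(baseline, recent))
```

Asking a vendor which drift statistic they track, on which features, and at what alert level is a reasonable due diligence question regardless of the specific method they use.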

Even after initial validation, ongoing oversight is critical to maintain the model’s performance in real-world settings.

Model Lifecycle Management and Monitoring

Once a model is deployed, the work doesn’t stop. Continuous monitoring is essential to track performance and address issues like data drift or model degradation. This involves implementing systems to monitor anomalies, assess output quality, and analyze usage patterns in real time [1]. Sudden changes in these areas may indicate security breaches, data quality issues, or performance degradation that needs immediate action.
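
As a rough illustration of post-deployment monitoring, the sketch below tracks accuracy over a sliding window of recent cases and flags when it falls below a threshold, such as one written into an SLA. The class name, window size, and threshold are hypothetical. It also assumes confirmed outcomes eventually become available for comparison, which in clinical settings can lag by days or weeks.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent `window` predictions."""

    def __init__(self, window=100, threshold=0.9):
        self.outcomes = deque(maxlen=window)  # oldest entries drop off automatically
        self.threshold = threshold

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def breached(self):
        # Only alert once the window is full, to avoid noise from a handful of cases.
        is_full = len(self.outcomes) == self.outcomes.maxlen
        return is_full and self.accuracy() < self.threshold
```

In practice the same pattern can be applied to other signals mentioned above, such as the rate of anomalous inputs or unusual usage volumes.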

It’s also important to document processes for version control, client notifications, and regular reassessments. For example, the FDA has proposed quality management systems and protocols for updating adaptive algorithms in healthcare [7]. These guidelines reflect the growing emphasis on managing models dynamically to keep up with evolving healthcare needs and regulatory standards.

Meeting Regulatory Compliance Requirements

Healthcare machine learning (ML) vendors operate in one of the most highly regulated environments in the U.S. Ensuring these vendors meet compliance standards is crucial - not just for protecting patient safety but also for avoiding costly penalties. Consider this: the average healthcare data breach costs nearly $10 million, and over 65% of healthcare companies have faced at least one ransomware attack [9]. Below, we’ll explore key regulatory standards and vendor practices designed to safeguard protected health information (PHI), maintain patient safety, and address emerging AI guidelines.

HIPAA Compliance and PHI Protection

Vendors handling PHI must comply with HIPAA’s Privacy and Security Rules, which apply to business associates as well as covered entities [9]. A critical first step is ensuring the vendor provides a Business Associate Agreement (BAA) that outlines safeguards for PHI, breach notification protocols, and data destruction policies.

However, don’t stop at the BAA. Dive deeper into the vendor’s security practices. Effective vendors will have systems in place to prevent and detect breaches, such as automated EHR log analysis, insider threat detection through pattern analysis, and workflows for breach notification and remediation [8]. Look for vendors with ongoing compliance certifications like HITRUST, SOC 2 Type 2, or ISO 27001, as these reflect continuous efforts to maintain security, rather than one-time assessments [9].

Data destruction is another critical area to scrutinize. When offboarding a vendor, ensure they take proper steps to securely destroy patient records. This often involves obtaining a signed statement confirming the destruction [9]. Additionally, regularly updating your BAA templates is essential to keep pace with evolving HIPAA requirements, especially as machine learning introduces new risks in data processing and decision-making algorithms.

FDA Requirements and Patient Safety

If a machine learning solution functions as Software as a Medical Device (SaMD) or a clinical decision support (CDS) tool, it may fall under the FDA’s oversight. These systems, while powerful, come with risks such as software bugs, algorithmic errors, and vulnerabilities in data security [10].

"Given these evolving risks, it is crucial to systematically assess and manage risks from the early stages of development through the entire product lifecycle." [10]

When assessing vendors, confirm whether their ML solutions require FDA clearance or approval. If they do, ensure they follow ISO 14971:2019, the international standard for applying risk management to medical devices [10]. This standard requires vendors to conduct risk assessments, implement controls, evaluate residual risks, and maintain detailed documentation throughout the product’s lifecycle. Additionally, conformance with ISO 13485, the quality management system standard for medical devices, indicates that risk management is integrated into the vendor’s Quality Management System [10].

Ask vendors to provide evidence of a robust risk management plan. This should include clear criteria for risk acceptability, defined roles and responsibilities, and processes for monitoring real-world performance data, such as adverse events and customer feedback [10]. Continuous monitoring is essential to ensure patient safety across different clinical scenarios. By holding vendors to these rigorous standards, healthcare organizations can maintain the integrity and safety of ML-driven solutions.

Alignment with AI and Security Standards

Beyond HIPAA and FDA regulations, machine learning vendors should also align with emerging AI-specific frameworks and cybersecurity standards. The NIST AI Risk Management Framework (AI RMF) offers a structured approach to identifying, analyzing, and mitigating risks unique to AI systems [5]. This is particularly important given the staggering 400% increase in vendor attacks over the past two years [9].

During vendor evaluations, ask targeted questions about their ability to address AI-specific risks like adversarial attacks, data poisoning, and model drift. A vendor might excel in traditional cybersecurity but lack expertise in managing AI-related vulnerabilities. The goal is to ensure they meet both established healthcare regulations and the newer, AI-focused governance standards. By prioritizing security qualifications over cost, you can build a layered defense that addresses both current and emerging threats in healthcare ML solutions.


Building an ML Vendor Risk Assessment Program

When it comes to managing machine learning (ML) vendors, a well-structured risk assessment program is essential. By following a consistent framework, you can evaluate, score, and monitor vendors effectively throughout their lifecycle. Without standardized processes, assessments risk becoming inconsistent, biased, or difficult to justify during audits.

Creating Vendor Questionnaires and Due Diligence Processes

Develop ML-specific questionnaires to address critical areas like data quality, model validation, and compliance with healthcare regulations. For data quality, ask vendors about their data sourcing methods, protocols for detecting bias, and documentation of data lineage. On model validation, request evidence of performance testing across diverse patient groups, documentation of intended use cases, and processes for managing model updates. For compliance, verify safeguards for HIPAA, FDA clearance status (if applicable), and adherence to frameworks like the NIST AI RMF.

Standardize the documents you request during due diligence to ensure consistent evaluations. Commonly required documents might include Business Associate Agreements, SOC 2 Type 2 reports, ISO 27001 or HITRUST certifications, model validation reports, and risk management documentation (such as ISO 14971:2019 for SaMD vendors). Evidence of ongoing compliance monitoring, like annual audits or real-time oversight, is far more reliable than one-off assessments. Where possible, consider conducting independent audits of critical vendors, as third-party reports may not always provide a complete picture. These steps create a foundation for quantifying vendor risks in a systematic way.
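
A standardized document request can be tracked as a simple checklist. The sketch below is a simplification under stated assumptions: the document keys are hypothetical names, and the baseline set is one reasonable reading of the list above, not a fixed requirement. It does not model the "ISO 27001 or HITRUST" alternative.

```python
# Hypothetical document keys; adjust the sets to your own due diligence policy.
REQUIRED_DOCUMENTS = {
    "all": {
        "business_associate_agreement",
        "soc2_type2_report",
        "model_validation_report",
    },
    "samd": {"iso_14971_risk_management_file"},
}


def missing_documents(received, is_samd=False):
    """Return the required documents the vendor has not yet supplied, sorted by name."""
    required = set(REQUIRED_DOCUMENTS["all"])
    if is_samd:
        # Software as a Medical Device vendors owe additional risk documentation.
        required |= REQUIRED_DOCUMENTS["samd"]
    return sorted(required - set(received))
```

Keeping the checklist in one place makes it easier to show auditors that every vendor was asked for the same evidence.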

Scoring and Prioritizing Vendor Risks

Not every ML vendor poses the same level of risk. Build a risk-tier system that accounts for factors such as data sensitivity, the criticality of the model, and regulatory implications. For instance, a vendor offering an ML-powered clinical decision support tool that handles protected health information (PHI) and influences treatment decisions would fall into the high-risk category. On the other hand, a vendor providing administrative automation with minimal data access might be classified as moderate or low risk.

Define clear scoring criteria to ensure evaluations are unbiased and consistent. Assess vendors across multiple dimensions, such as HIPAA compliance rates, audit findings, system uptime, and the severity of any issues identified. Include performance metrics in Service Level Agreements (SLAs), like minimum system uptime for SaaS vendors or incident response time targets. This approach helps prioritize resources - high-risk vendors receive greater scrutiny, while assessments for lower-risk vendors can be streamlined. Once scored, vendors should be continuously monitored to identify any changes in their risk profile.
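
A weighted score is one straightforward way to turn these factors into a tier. In the sketch below, the factor names, the weights, the 1 to 5 rating scale, and the tier cutoffs are all illustrative assumptions that an organization would calibrate to its own risk appetite.

```python
# Assumed weights; they sum to 1.0 so the score stays on the 1-5 rating scale.
WEIGHTS = {
    "data_sensitivity": 0.4,
    "model_criticality": 0.4,
    "regulatory_exposure": 0.2,
}


def risk_score(ratings):
    """ratings: factor name -> rating from 1 (low) to 5 (high)."""
    return sum(weight * ratings[factor] for factor, weight in WEIGHTS.items())


def risk_tier(score):
    """Map a score to a tier using assumed cutoffs."""
    if score >= 4.0:
        return "high"
    if score >= 2.5:
        return "moderate"
    return "low"
```

For example, a clinical decision support vendor rated 5 for data sensitivity, 5 for model criticality, and 4 for regulatory exposure lands in the high tier, while an administrative automation vendor rated 2, 1, and 1 lands in the low tier.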

Monitoring and Reassessing Vendors

Vendor risk management doesn’t stop after the initial assessment. Set up a schedule for regular reassessments based on risk levels: annually for high-risk vendors, every 18 months to two years for moderate-risk vendors, and every two to three years for low-risk vendors or before contract renewal [11]. However, immediate reassessments should be triggered by red flags such as data breaches, performance issues, major system updates, or regulatory changes [11] [12].
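
The schedule and triggers above translate into a small scheduling rule. This sketch uses the shorter end of each interval mentioned in the text, and the trigger names are hypothetical labels for the red flags listed. Clamping the day of the month to 28 is a simplification that avoids invalid dates.

```python
from datetime import date

# Months between routine reassessments (shorter end of each range in the text).
INTERVAL_MONTHS = {"high": 12, "moderate": 18, "low": 24}

# Hypothetical labels for events that force an immediate reassessment.
TRIGGERS = {"data_breach", "performance_issue", "major_system_update", "regulatory_change"}


def add_months(start, months):
    """Add whole months to a date, clamping the day to 28 for simplicity."""
    total = start.month - 1 + months
    return date(start.year + total // 12, total % 12 + 1, min(start.day, 28))


def next_reassessment(last_assessed, tier, events=(), today=None):
    """Return the due date: today if any trigger fired, otherwise the routine date."""
    today = today or date.today()
    if TRIGGERS & set(events):
        return today
    return add_months(last_assessed, INTERVAL_MONTHS[tier])
```

A contract renewal date, where it falls earlier than the routine date, could be handled the same way as a trigger.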

High-risk vendors should agree to continuous monitoring of critical areas. Look for signs like declining model performance, missed SLAs, delayed security patches, or changes in the vendor’s organizational structure. Share information centrally within your organization regarding regulatory updates, vendor issues, and emerging threats. When offboarding vendors, follow a checklist to revoke access, change passwords, disable badges, remove network access, and ensure the proper destruction of patient records. This structured approach minimizes risks and keeps your organization secure.

Conclusion

Assessing the risks associated with machine learning vendors is a critical task for healthcare organizations working under HIPAA and similar regulatory frameworks [9]. These risks can range from cybersecurity threats and regulatory non-compliance to operational disruptions and even patient safety concerns. Conducting thorough vendor evaluations plays a pivotal role in managing these challenges effectively [11].

To ensure safe AI integration, there are three core areas that demand attention: data quality, model validation, and compliance. Addressing these systematically is essential for creating a strong risk management program that supports the safe and effective use of AI technologies.

Healthcare risk management is shifting from a reactive approach to one of proactive vigilance [13]. With regulatory requirements constantly evolving, it’s no longer enough to rely on one-time evaluations. Instead, organizations must adopt continuous monitoring as a cornerstone of their vendor assessment programs.

Machine learning systems are dynamic by nature. Over time, models can face issues like data drift, performance degradation, or the emergence of new biases post-deployment [2][3][5]. A model that meets validation standards today might fail tomorrow without proper oversight. Continuous monitoring is essential to catch these potential issues early, avoiding diagnostic errors, treatment delays, or inappropriate interventions that could jeopardize patient care [3][5]. This level of vigilance not only maintains model performance but also ensures vendors remain accountable.

FAQs

How can healthcare organizations verify the quality of data provided by machine learning vendors?

When working with machine learning vendors, healthcare organizations must take steps to ensure the quality of the data being provided. Start by conducting a detailed review of the vendor's data sources to confirm they meet established industry standards. Ask for comprehensive documentation outlining their data governance, accuracy protocols, and validation methods. This will help you assess whether their processes are solid and trustworthy.

To maintain data integrity over time, regular audits and consistent monitoring are critical. It’s also vital to verify that vendors adhere to healthcare regulations like HIPAA, which are designed to protect sensitive patient information and ensure a high level of quality and security in data handling.

How can machine learning models be validated for use in healthcare?

To validate machine learning models in healthcare, the first step is to focus on data quality. This includes checking for any biases that might exist in the datasets, as these can skew results and impact outcomes. Testing the model with independent datasets is crucial to assess its performance, accuracy, and reliability.

It's also important to evaluate the model's clinical relevance. This means ensuring it aligns with the practical needs and scenarios in healthcare settings, making it valuable in real-world applications. Additionally, compliance with regulatory standards like HIPAA and FDA guidelines is essential to meet both legal and ethical requirements.

After deployment, keep a close eye on the model's performance over time. Set up a change management process to handle updates or modifications efficiently, ensuring the model remains effective and trustworthy as healthcare needs evolve.

What compliance standards must machine learning vendors meet in the healthcare industry?

Machine learning vendors in healthcare have to navigate several regulations to ensure the privacy, security, and transparency of sensitive data. Key requirements include adhering to HIPAA, which safeguards protected health information (PHI), and following FDA regulations like 21 CFR Part 11, which governs electronic records and electronic signatures. A SOC 2 Type 2 report, while an attestation rather than a regulation, provides independent evidence of controls over system security and availability. Vendors must also meet breach notification requirements under HITECH and align with state-specific laws such as the California Consumer Privacy Act (CCPA) and the Virginia Consumer Data Protection Act (CDPA).

To stay compliant, vendors should focus on secure data management practices, maintain detailed documentation of their AI systems, and implement strong privacy safeguards. Meeting these standards not only keeps healthcare data secure but also fosters trust and accountability with patients and partners.